Define a load test

A load test is a test designed to measure an application's behavior under normal and peak conditions. You add scripts, monitors, and SLAs to a load test definition and configure them there.

Get started

From the Load tests page, you can select a project or create and edit a test.

| Action | How to |
|---|---|
| Select a project | Select a project from the dropdown in the banner. |
| Create a test | To create a test, perform one of the following steps: |
| Edit a test | |
| Duplicate a test | Click Note: This feature requires the test name to be 120 characters or fewer. |
| Give your test a label | Organize your tests by assigning them labels. For details, see Assign labels. |
| View test settings | Use the left or right facing arrows in the top right to show or hide the Summary pane. This pane shows information about the last 5 runs, and lists test settings such as the number of Vusers per type, the duration, and the test ID. Tip: You can resize the pane by dragging its border. |

Navigation panel

After selecting or creating a test, the Load tests navigation panel on the left provides the following options:

- Test settings. Configure basic, run configuration, log, and load generator settings. For details, see Define test settings.
- Load profile. Shows a list of the scripts and lets you set a script schedule. For details, see Configure a schedule for your script and Manage scripts.
- Load distribution. Choose the load generator machines for your Vusers. Optionally, assign scripts and the number of Vusers for on-premises load generators. For details, see Configure cloud load generator locations or Configure on-premises load generator locations.
- SLA. Shows the SLA for the test. For details, see Configure SLAs.
- Single user performance. Collect client side breakdown data. For details, see Generate single user performance data.
- Rendezvous. Set up a rendezvous for your Vusers. For details, see Configure rendezvous settings. Note: This tab is only displayed if a script containing rendezvous points is included in a load test.
- Monitors. Shows a list of monitors. For details, see Add monitors to your load test.
- Streaming agent. Shows the streaming agent for the test. For details, see Data streaming. (This option is only visible when data streaming was enabled for your tenant via a service request.)
- Disruption events. Add disruption events to the load test. For details, see Configure disruption events.
- Test scheduler. Set a schedule for the test run. For details, see Schedule a test run.
- Runs. Opens the Runs pane listing the runs for the selected test along with run statistics such as run status, regressions, and failed transactions. For details, see View test run status.
- Trends. For details, see Trends.
- History. Lists the changes made to the load test, along with a timestamp and the user who made the changes. For example, you can determine who made changes to the test's settings, who modified the script, and who changed the ramp up time. The following are not included in the history:

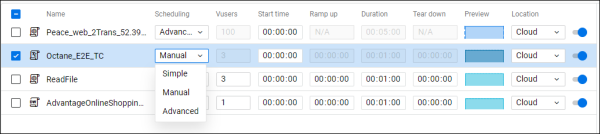

Configure a schedule for your script

In the Load profile page, you can configure a schedule for each script according to the run mode configured for the load test.

| Run mode | Scheduling mode |

|---|---|

| Duration | There are three modes of script schedules that you can select: Simple, Manual, or Advanced. |

| Iteration | Simple mode only. |

| Goal Oriented | No scheduling options are available. |

You can configure the basic settings for all scripts (see Basic settings), and various other settings depending on the scheduling mode that you selected. For details, see Simple mode settings, Manual mode settings, and Advanced mode settings.

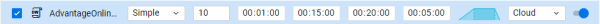

To configure the scheduling mode settings, click the Scheduling dropdown arrow adjacent to a script, and select Simple, Manual, or Advanced.

You can customize the settings for each script either in the table columns, or in the schedule profile pane to the right, depending on which scheduling mode is selected. The visible columns also change depending on the Run mode selected in the Test settings options (Duration, Iterations, or Goal Oriented).

You then configure additional settings in the schedule profile pane to the right.

A graph displays a visual representation of the script schedule showing the number of Vusers, duration, ramp up, and tear down. (A smaller version of the graph is also displayed in the row of the selected script.)

Simple mode is the default schedule mode, and enables you to configure a time during which the Vusers are started or stopped, at a consistent rate. For example, if you configure ten Vusers for the script and a ramp up time of ten minutes, one Vuser is started each minute.
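The consistent-rate ramp up described above can be illustrated with a short Python sketch. This is only an illustration: the function name is hypothetical, and the assumption that the first Vuser starts one interval after the test begins (rather than at time zero) is not stated in this guide.

```python
def simple_ramp_up_start_times(vusers: int, ramp_up_seconds: float) -> list[float]:
    """Return the start offset, in seconds, of each Vuser for a consistent-rate ramp up.

    Assumption (not from the product documentation): Vuser k starts at
    k * interval, so the last Vuser starts exactly when the ramp up ends.
    """
    if vusers <= 0:
        return []
    interval = ramp_up_seconds / vusers
    return [k * interval for k in range(1, vusers + 1)]


# Ten Vusers with a ten-minute ramp up: one Vuser per minute.
starts = simple_ramp_up_start_times(10, 600)
print(starts[1] - starts[0])  # seconds between consecutive Vuser starts
```

With ten Vusers and a 600-second ramp up, consecutive start times are 60 seconds apart, which matches the one-Vuser-per-minute example.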

Configure the following additional settings directly in the row of the selected script:

| Action | How to |
|---|---|
| Define the ramp up | Specify the ramp up time to start Vusers at a consistent rate. Note: For TruClient Native Mobile scripts, only one Vuser runs, so there is no ramp up. |
| Define the tear down | Specify the tear down time to stop Vusers at a consistent rate. Note: For TruClient Native Mobile scripts, only one Vuser runs, so there is no tear down. |

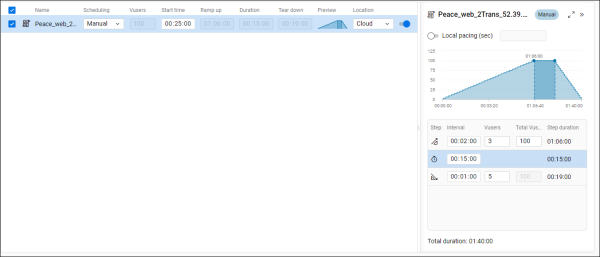

Note: The Manual scheduling mode is only enabled for load tests configured to run in Duration mode.

Manual mode enables you to simulate a slightly more complex scenario by configuring the ramp up and tear down values for a specific number of users in time intervals. For example, you can configure three Vusers to be started every two minutes until all the Vusers for the test have been started.

Select Manual scheduling mode, and configure the following additional settings in the schedule profile pane:

| Action | How to |
|---|---|
| Define the ramp up, the rate at which Vusers are added. | Specify how many Vusers are added and at what time intervals. |
| Define the tear down, the pace at which Vusers are stopped. | Specify how many Vusers are stopped and at what time intervals. |

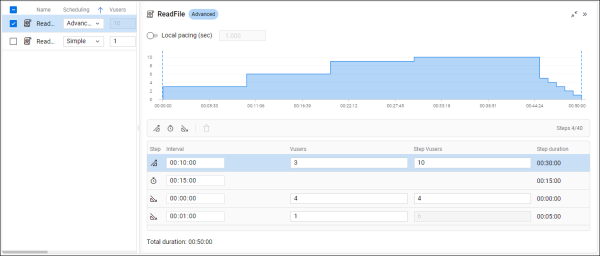

Note: The Advanced scheduling mode is only enabled for load tests configured to run in Duration mode.

The Advanced mode enables you to configure a schedule that more accurately reflects a real life scenario, with multiple instances of Vusers starting and stopping during the test.

Select Advanced scheduling mode. In the schedule profile pane, configure multiple steps using the following settings:

| Action | How to |
|---|---|
| Define the ramp up, the rate at which Vusers are added. | Click Add ramp up to add a Ramp Up step and specify how many Vusers are added and at what time intervals. For example, you can configure two Vusers to be started every four minutes until a total of twelve Vusers have been started. |
| Set a duration | Click Add duration to add a Duration step and specify the time the test runs before executing the next scheduling step. Duration does not include ramp up and tear down times. |
| Define the tear down, the pace at which Vusers are stopped. | Click Add tear down to add a Tear Down step and specify how many Vusers are stopped and at what time intervals. For example, you can configure three Vusers to be stopped every two minutes until a total of nine Vusers have been stopped. |

Note:

- Advanced mode is unavailable for:
  - Scripts in a load test configured for the Iterations run mode.
  - TruClient Native Mobile scripts.
  - Scripts with the Test settings > Add Vusers option selected. For details, see Define test settings.
- The first step must be a Ramp up.
- The last step must be a Tear down.
- The schedule must include at least one Ramp up step, one Duration step, and one Tear down step.
- You can include a maximum of forty steps in the schedule.
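The step rules in the note above can be checked mechanically. The following Python sketch is a hypothetical validator, not part of LoadRunner Cloud: the step names (`ramp_up`, `duration`, `tear_down`) and the function are illustrative assumptions, and only the four rules themselves come from this guide.

```python
MAX_STEPS = 40  # maximum number of steps allowed in an Advanced schedule
REQUIRED_KINDS = ("ramp_up", "duration", "tear_down")  # hypothetical step names


def validate_advanced_schedule(steps: list[str]) -> list[str]:
    """Return a list of rule violations for an ordered list of step kinds."""
    if not steps:
        return ["schedule is empty"]
    errors = []
    if steps[0] != "ramp_up":
        errors.append("the first step must be a ramp up")
    if steps[-1] != "tear_down":
        errors.append("the last step must be a tear down")
    for kind in REQUIRED_KINDS:
        if kind not in steps:
            errors.append(f"missing at least one {kind} step")
    if len(steps) > MAX_STEPS:
        errors.append(f"more than {MAX_STEPS} steps")
    return errors


print(validate_advanced_schedule(["ramp_up", "duration", "ramp_up", "duration", "tear_down"]))
print(validate_advanced_schedule(["duration", "tear_down"]))
```

An empty result means the schedule satisfies all four rules; otherwise each string names one violated rule.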

For any of the schedule modes, you can configure the following additional settings:

| Action | How to |
|---|---|
| Set pacing | Controls the time between iterations. The pace tells the Vuser how long to wait between iterations of your actions. You can control pacing either from the Load test > Load profile tab or from Runtime settings > Pacing. To set the pacing in the Load test > Load profile tab: For LoadRunner scripts, if your script contains fixed pacing, pacing defaults to the script value. You can manually change the value. To set the pacing using runtime settings, open the runtime settings. Note: If local pacing is turned off, the runtime settings values are used by default. |

Configure a goal for a load test

You can configure a load test to run in Goal Oriented mode. If you select this option, you configure a goal for the test and when the test runs, it continues until the goal is reached. For more details, see How LoadRunner Cloud works with goals below.

To configure a goal oriented test:

- In the load test's Test settings page, select Run Mode > Goal Oriented. For details, see Run mode.
- In the load test's Load profile page, configure the % of Vusers and Location for each script. For details, see Configure a schedule for your script.
- In the load test's Load profile page, click the Goal settings button. In the Goal Settings dialog box that opens, configure the following:

| Setting | Description |
|---|---|
| Goal type | Select the goal type: Hits per second (the number of hits, that is HTTP requests, to the Web server per second) or Transactions per second (the number of transactions completed per second; only passed transactions are counted). |
| Transaction name | If you selected Transactions per second as the goal type, select or input the transaction to use for the goal. |
| Goal value | Enter the number of hits per second or transactions per second to be reached, for the goal to be fulfilled. |
| Vusers | Enter the minimum and maximum number of Vusers to be used in the load test. |
| Ramp up | Select the ramp up method and time. This determines the amount of time in which the goal must be reached. Ramp up automatically with a maximum duration of: Ramp up Vusers automatically to reach the goal as soon as possible. If the goal can't be reached after the maximum duration, the ramp up stops. Reach target number of hits or transactions per second <time> after test is started: Ramp up Vusers to try to reach the goal in the specified duration. Step up N hits or transactions per second every: Set the number of hits or transactions per second that are added and at what time interval to add them. Hits or transactions per second are added in these increments until the goal is reached. |
| Action if target cannot be reached | Select what to do if the target is not reached in the time frame set by the Ramp up setting. Stop test run: Stop the test as soon as the time frame has ended. Continue test run without reaching goal: Continue running the test for the duration time setting, even though the goal was not reached. |
| Duration | Set a duration time for the load test to continue running after the time frame set by the Ramp up setting has elapsed. Note: The total test time is the sum of the Ramp up time and the Duration. |

The following do not support the Goal Oriented run mode:

- Adding Vusers
- Generating single user performance data
- The Timeline tab when scheduling a load test
- Network emulations for cloud locations
- Changing the load in a running load test
- TruClient Native Mobile scripts

How LoadRunner Cloud works with goals

LoadRunner Cloud runs Vusers in batches to reach the configured goal value. Each batch has a duration of 2 minutes.

In the first batch, LoadRunner Cloud determines the number of Vusers for each script according to the configured percentages and the minimum and maximum number of Vusers in the goal settings.

After running each batch, LoadRunner Cloud evaluates whether the goal has been reached or not. If the goal has not been reached, LoadRunner Cloud makes a calculation to determine the number of additional Vusers to be added in the next batch.

If the goal has been reached, or there are no remaining Vusers to be added, the ramp up ends. Note that the value can be greater than the defined goal value. LoadRunner Cloud does not remove Vusers to reduce the load.

During run time, if the goal was reached, but subsequently dropped to below the configured value, LoadRunner Cloud tries to add Vusers (if there are any remaining to be added) to reach the goal again.

LoadRunner Cloud does not change think time and pacing configured for scripts.

Configure cloud load generator locations

For each script in the test, you configure a Cloud or On-premises location. This is the location of the load generators that run the script. For details, see Configure a schedule for your script.

Tip: To learn more about the machine types used for cloud load generators, see Cloud machine types.

To configure the settings for cloud locations:

For cloud locations, you configure the distribution of Vusers and the network emulation.

- In the Load distribution pane, click the Cloud tab.
- Click Edit locations and select the locations to add to your test. For details, see Vuser distribution locations.

  Tip: Click Group by to group the load generators by location, vendor, vendor region, or geographical area.
- In the Cloud tab, click Edit network emulations and select up to five emulations for your test. For details, see Network emulations.
- For each location that you selected:
  - Enter the percentage of Vusers you want to distribute to this location. The Vuser distribution must total 100 percent.
  - Enter the percentage of those Vusers distributed to a location for which you want to assign a network emulation.
- The distribution of locations and network emulations is equally applied to each of the test scripts.

Example

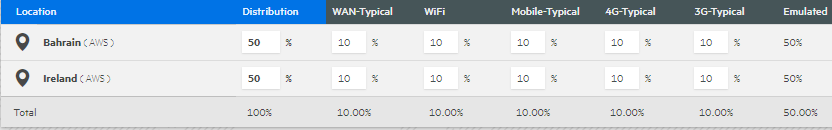

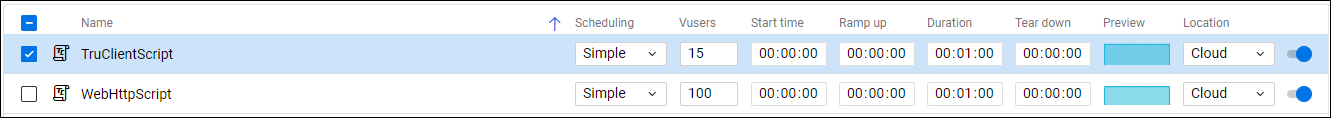

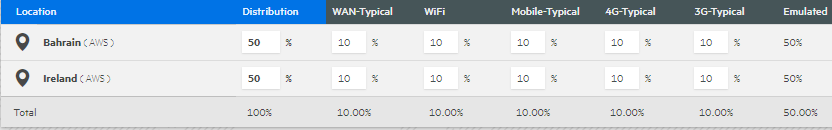

In the following example, in the test's Load profile page, 15 Vusers are deployed for a TruClient script and 100 Vusers for a Web HTTP script.

The location is set to Bahrain for 50 percent of the Vusers, and Ireland for the other 50 percent.

When the test starts in the Bahrain region, 7 Vusers are launched for the TruClient script and 50 Vusers for the Web HTTP script.

In Ireland, the test launches the remaining Vusers: 8 Vusers for the TruClient script and 50 Vusers for the Web HTTP script.
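The arithmetic in this example can be reproduced with a small Python sketch. The function is hypothetical, and the rounding rule (round each share down, then give any leftover Vusers to the last location) is an assumption inferred from the 7 and 8 split above; the guide does not state how LoadRunner Cloud rounds with three or more locations.

```python
def split_vusers(total: int, percentages: dict[str, int]) -> dict[str, int]:
    """Split a script's Vusers across locations by percentage.

    Assumption: each share is rounded down and the remainder goes to the
    last listed location, which reproduces the Bahrain/Ireland example.
    """
    if sum(percentages.values()) != 100:
        raise ValueError("the Vuser distribution must total 100 percent")
    counts = {loc: total * pct // 100 for loc, pct in percentages.items()}
    leftover = total - sum(counts.values())
    last_location = list(percentages)[-1]
    counts[last_location] += leftover
    return counts


locations = {"Bahrain": 50, "Ireland": 50}
print(split_vusers(15, locations))   # TruClient script
print(split_vusers(100, locations))  # Web HTTP script
```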

Load distribution templates

Load distribution templates are entities that you can apply to your load tests. You can customize an existing distribution (locations, distribution, and network emulations) and save it as a template for future use within the same project.

To create a new distribution template:

- In the Load distribution pane of a load test, click the Cloud tab.
- Configure the locations, distribution, and network emulations you want to save as a template.
- Click Templates and then click Save as.
- Enter a name and (optional) description and click Save.

To use an existing template:

- When creating or editing a load test, select the Load distribution pane and click the Cloud tab.
- Click Templates and then click Template management.
- From the list of available templates, select the template you want to use and then click Select. The distribution settings from the selected template are added to the load test.

To delete an existing template:

- In the Load distribution pane of a load test, click the Cloud tab.
- Click Templates and then click Template management.
- Click the trash can icon for the template you want to delete and confirm the deletion.

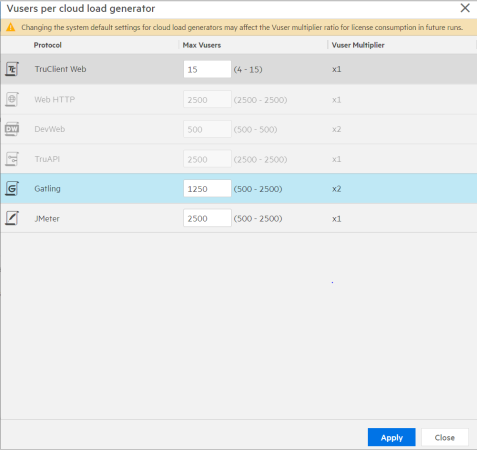

Cloud LG infrastructure capacity

By default, LoadRunner Cloud assigns a maximum number of Vusers for each cloud load generator per protocol. For example, the default configuration for Vusers running Web HTTP scripts is a maximum of 2,500 Vusers per load generator. In this case, if you choose to run 10,000 Web HTTP Vusers in a single location, LoadRunner Cloud provisions four cloud load generators in that location.

The following table lists the default maximum number of Vusers for each protocol:

| Protocol | Maximum number of Vusers |

|---|---|

| Citrix ICA | 25 |

| DevWeb | 1500 |

| .NET | 500 |

| Gatling | 2500 |

| Java | 1500 |

| JMeter | 2500 |

| Kafka | 1500 |

| Mobile HTTP | 2500 |

| MQTT | 2500 |

| ODBC | 100 |

| Oracle NCA | 2500 |

| Oracle - Web | 2500 |

| RDP | 25 |

| RTE | 25 |

| SAP GUI | 25 |

| SAP - Web | 2500 |

| Selenium | 15 |

| Siebel Web | 2500 |

| SP Browser-driven | 15 |

| SP HLS | 500 |

| SP Web Basic | 2500 |

| SP Web Page-Level | 2500 |

| SP Web Services | 2500 |

| TruAPI | 2500 |

| TruClient Mobile Web | 15 |

| TruClient Native Mobile | 15 |

| TruClient Web | 15 |

| Web HTTP | 2500 |

| Web Services | 2500 |

| Windows Sockets | 500 |
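The provisioning arithmetic described above (10,000 Web HTTP Vusers at 2,500 per load generator gives four load generators) can be sketched in Python. The function and dictionary names are hypothetical; the limits are copied from a few rows of the table, and rounding up for counts that are not exact multiples is an assumption.

```python
# A few default limits copied from the table above.
DEFAULT_MAX_VUSERS = {
    "Web HTTP": 2500,
    "TruClient Web": 15,
    "Citrix ICA": 25,
}


def load_generators_needed(vusers: int, protocol: str,
                           limits: dict[str, int] = DEFAULT_MAX_VUSERS) -> int:
    """Estimate the cloud load generators needed in one location.

    Assumption: the count is rounded up, so a partially filled load
    generator still counts as one.
    """
    limit = limits[protocol]
    return -(-vusers // limit)  # integer ceiling division


print(load_generators_needed(10_000, "Web HTTP"))
```

Lowering a protocol's limit, as described in the next section, raises this count for the same number of Vusers.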

In certain instances, you may want to change the default configuration:

- High CPU or Memory utilization. Lowering the number of Vusers per cloud load generator may help reduce the CPU and memory utilization. For details, see LG alerts.
- Environment limitations. Some environments do not allow you to load the system with more than a certain number of Vusers originating from a single IP address. You can work around this limit by reducing the number of Vusers per cloud load generator. Another option is to enable the Multiple IPs feature. For details, see Enable multiple IPs for cloud and on-premises load generators.

To customize the configurations per protocol (admins):

- Make sure you have access to Tenant management. Go to LoadRunner Cloud banner > Settings > Tenant management > Projects.
- Click a project and go to the Load Generators page.
- Click the Cloud tab. If no data is visible, you must enable this capability with a service request.
- Activate or deactivate a protocol for end users by toggling the switch in the Active column.
- Optionally, modify the maximum number of allowed Vusers for the active protocols.
- Click Apply. Note any pop ups or information messages indicating how the changes affect your tests.

In the load test's Test settings options, you can set a limit to the number of Vusers that can run on a cloud load generator, per protocol, for each test.

This capability is off by default. To enable it, your admin must submit a service request. After this capability is enabled, your admin can activate or deactivate protocols for each project, and set the lower limit of Vusers per protocol. For details, see Cloud LG infrastructure capacity.

After this feature has been enabled, follow these steps to configure the Vuser limits for your test:

- Set the Enable Cloud LG Vusers configuration option to Manual in Test settings. For details, see Load generators settings.
- Click the Edit button adjacent to the Manual option. The Vusers per cloud generator dialog box opens.
- Specify the maximum number of Vusers that you want to be able to run in the Vusers per cloud load generator dialog box.
- Click Apply. Note any pop up windows and informational messages describing how your changes affect Vuser multipliers and costs.
- In the Load tests > Load profile pane, click the License button to learn about your license consumption. If the multiplier was changed due to your changes, the button name indicates 'Multiplied'.

Potential implications of modifying the default values

The following potential implications may apply when modifying the maximum number of Vusers:

License consumption. License consumption may increase depending on the actual configuration. Pay attention to the pop up windows and information messages issued when you apply your changes.

Dedicated IPs. When using dedicated IPs for cloud load generators, reducing the maximum number of Vusers per cloud load generator increases the number of dedicated IPs required for your future tests.

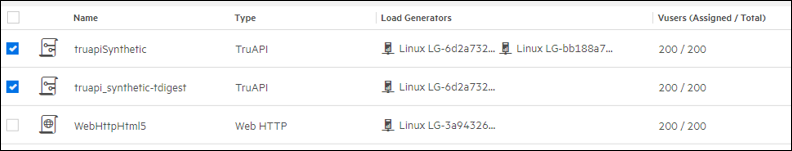

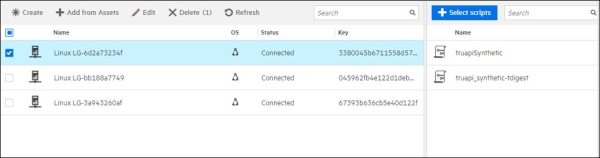

Configure on-premises load generator locations

This section describes how to choose on-premises load generators for your test. For details about script permissions, see Configure a schedule for your script.

To configure the settings for on-premises locations:

- In the Load distribution pane, click the On-premises tab.
- Click Add from Assets to select the load generators for your test, or click Create to add a new load generator. The load generator is added to the grid, showing its name, OS, connectivity status, and access key.
- Optionally, manage the on-premises load generators in the grid:
  - Click a column header to sort by that value.
  - Click Refresh to check the current connectivity status of the selected on-premises load generator.
  - Click Edit to manage the details of the load generator machine. For details, see Load generator assets.
  - Click Delete to remove the selected load generators. This action removes the on-premises load generators from the test but not from the LoadRunner Cloud assets.
- Optionally, assign scripts and Vusers per load generator through a simple or advanced assignment method. To enable this capability:
  - Choose Enable manual Vuser distribution for on-premises load generators in the Test settings page.
  - Select an assignment method: Simple or Advanced. For details, see Define test settings.

Note:

- Only scripts that are configured with a Location of On-premises (in the Load profile page) are displayed in the scripts list.
- If you change the location of a script that is already assigned to a load generator, the script is automatically unassigned from the load generator.

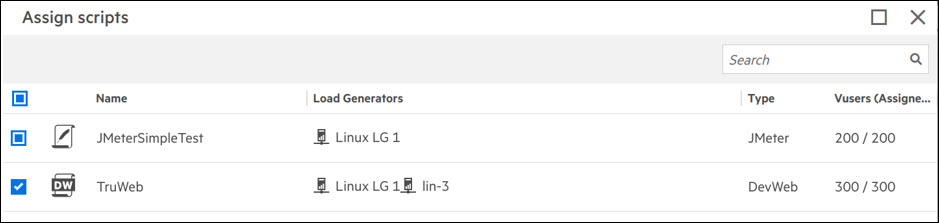

Simple assignment

With the Simple assignment method, you indicate which load test scripts run on which on-premises load generators.

In the Load distribution > On-premises page, the Assigned Scripts pane to the right of the load generator list shows the scripts assigned to the selected load generator.

Click + Select scripts to assign or unassign scripts to the selected load generator. To assign a new script, select it and click OK. To unassign a script, cancel its selection and click OK.

Note: When using the Simple assignment method, you can only assign scripts of the same type to a load generator. If a script is already assigned to a selected load generator, only scripts of the same type are enabled for assigning. Scripts of other types are unavailable.

Advanced assignment

When using the Advanced assignment method, you can assign multiple scripts of different types to a load generator. This gives you full control over how Vusers from different protocols are distributed.

You can also set the number of Vusers per script to run on specific on-premises load generators. Before setting the number of Vusers, make sure to assign each script to a load generator, as described in Simple assignment.

After you have assigned the scripts, set the number of Vusers either manually or automatically. For details, see Assign Vusers to on-premises load generators.

Note:

- If multiple protocols have been assigned to a load generator, and you switch from Advanced to Simple mode, all assignments for that load generator are cleared.
- If advanced scheduling is enabled for on-premises scripts, and the total Vuser ramp up is more than the entitled capacity of the license, advanced assignment will not work.

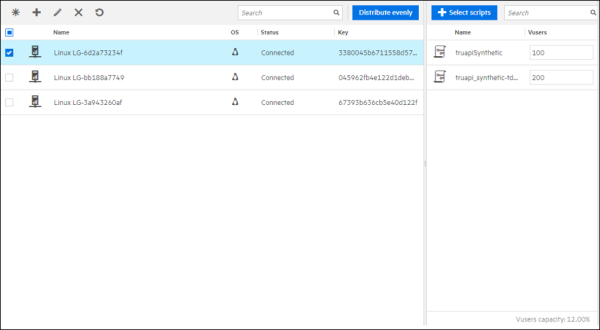

Assign Vusers to on-premises load generators

LoadRunner Cloud lets you assign the number of Vusers per load generator, manually or automatically.

To manually assign the number of Vusers:

- Navigate to the load test's Load distribution page and open the On-premises tab.
- Select the relevant load generator.
- Enter the number of Vusers to assign in the box adjacent to the script name.

Note: Make sure that the number of Vusers that you assign to your script matches the total number of Vusers in your load test. For example, if the script has 500 Vusers, you must manually assign all 500. In addition, the number of Vusers defined in your test must not exceed the maximum number of Vusers defined in your Vuser license.

To automatically assign an equal amount of Vusers to your on-premises load generators:

- Navigate to the load test's Load distribution page and open the On-premises tab.
- Click Distribute evenly. LoadRunner Cloud automatically distributes Vusers evenly over all assigned on-premises load generators, according to the script assignment configured in the test.

The following guidelines apply:

- The even distribution feature only affects Vusers for scripts assigned to the test's on-premises load generators.
- If a load generator is not limited to a number of Vusers less than its even portion, the even portion is assigned. For example, where both LG-1 and LG-2 are allowed to run 500 Vusers, the distribution is as follows:

| Script name | # of Vusers | LG assignment | # of Vusers running after clicking Distribute evenly |
|---|---|---|---|
| Script-A | 100 | LG-1 | 100 on LG-1 |
| Script-B | 200 | LG-2 | 200 on LG-2 |
| Script-C | 300 | LG-1, LG-2 | 150 on both LG-1 and LG-2 |

- If a load generator is limited to a number of Vusers less than its even portion, the maximum number of Vusers is assigned. For example, if LG-1 is allowed to run 30 Vusers and LG-2 500 Vusers (the even portion is 50: 100 Vusers divided by 2 LGs), the distribution is as follows:

| Script name | # of Vusers | LG assignment | # of Vusers running after clicking Distribute evenly |
|---|---|---|---|
| Script-A | 100 | LG-1, LG-2 | 30 on LG-1 and 70 on LG-2 |

- When assigning multiple scripts of different types to a load generator, the even distribution feature assigns Vusers as evenly as possible to all OPLGs. In doing so, it also takes the OPLG's total capacity into account.

| LG \ Script | TruClient-1 (Total 20 Vusers) | Web-1 (Total 3000 Vusers) | Total Capacity |
|---|---|---|---|
| LG-1 | 0 | 2000 | 80.00% (2000/2500) |
| LG-2 | 10 | 833 | 99.99% (10/15 + 833/2500) |
| LG-3 | 10 | 167 | 73.35% (10/15 + 167/2500) |

In the example above, each LG is limited to a maximum of 15 TruClient Vusers or 2,500 Web Vusers. After assigning 20 TruClient Vusers to LG-2 and LG-3, it is no longer possible to evenly distribute 3,000 Web Vusers to all three LGs, because 10/15 + 1000/2500 ~= 107% on LG-2 and LG-3, which exceeds 100%.

You can manually adjust the distribution after the automatic calculation.
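The single-script guidelines above (assign the even portion unless a load generator's limit is lower) can be sketched in Python. This is an illustrative approximation only: the function is hypothetical, it handles one script at a time, and it does not model the mixed-protocol capacity calculation. The order in which limited load generators are processed is also an assumption.

```python
def distribute_evenly(total: int, caps: dict[str, int]) -> dict[str, int]:
    """Spread one script's Vusers over load generators, respecting per-LG limits.

    Assumption: the most limited load generators are handled first, and
    whatever they cannot take is shared among the remaining ones.
    """
    assigned: dict[str, int] = {}
    remaining = total
    pending = sorted(caps, key=lambda lg: caps[lg])  # smallest limit first
    while pending:
        even_portion = remaining // len(pending)
        lg = pending.pop(0)
        assigned[lg] = min(caps[lg], even_portion)
        remaining -= assigned[lg]
    if remaining:
        raise ValueError("the load generators cannot hold all the Vusers")
    return assigned


print(distribute_evenly(300, {"LG-1": 500, "LG-2": 500}))  # Script-C example
print(distribute_evenly(100, {"LG-1": 30, "LG-2": 500}))   # limited LG-1 example
```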

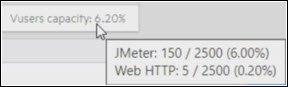

View the number of assigned Vusers

To see the number of Vusers assigned per script, click + Select scripts and refer to the Vusers column.

When assigning multiple scripts of different types to a load generator, hover the mouse over Vusers capacity to display the Vuser capacity and the distribution ratio for each script type:

Configure disruption events

You can include chaos testing in your load test by adding disruption events. To add disruption events, you must first configure your Gremlin account in LoadRunner Cloud. For details on configuring your Gremlin account, see Disruption events.

To add disruption events:

- In the Load tests tab, select a test and open the Disruption events pane. Disruption events already assigned to the load test are displayed. You can delete an event, or toggle the Active button to make an event active or inactive.
- To add new disruption events to the load test, click the Add button to open the Add events dialog box. All the available disruption events are listed. You can filter the list by attack type and can search for a specific event.
- Select the events you want to add to the load test and click Add. The selected events are added to the load test and are displayed in the Disruption events pane.
- In the Disruption events pane, for each disruption event set the start time (when during the test run the event should start).

Note:

- Disruption events must end before the completion of the load test run. This means that a disruption event's start time plus its duration cannot be greater than the total load test run time.
- The same disruption event cannot run concurrently in a load test. A disruption event must end before another instance of the same event can be started.

Disruption event data can be viewed in the Dashboard. For details, see Dashboard metrics.
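The two timing rules in the note above can be expressed as a simple check. The following Python sketch is hypothetical: the `DisruptionEvent` class, its fields, and the use of seconds are illustrative assumptions and do not reflect the LoadRunner Cloud or Gremlin data model.

```python
from dataclasses import dataclass


@dataclass
class DisruptionEvent:
    name: str
    start: int     # seconds from the start of the test run (assumed unit)
    duration: int  # seconds


def validate_events(events: list[DisruptionEvent], total_run_seconds: int) -> list[str]:
    """Return the timing rule violations for a set of disruption events."""
    errors = []
    last_end: dict[str, int] = {}
    for event in sorted(events, key=lambda e: e.start):
        end = event.start + event.duration
        if end > total_run_seconds:
            errors.append(f"{event.name}: ends after the load test run")
        previous_end = last_end.get(event.name)
        if previous_end is not None and event.start < previous_end:
            errors.append(f"{event.name}: overlaps an earlier instance of the same event")
        last_end[event.name] = max(previous_end or 0, end)
    return errors


events = [
    DisruptionEvent("cpu-attack", start=60, duration=120),
    DisruptionEvent("cpu-attack", start=100, duration=30),
]
print(validate_events(events, total_run_seconds=600))
```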

Configure rendezvous settings

When performing load testing, you need to emulate heavy user load on your system. To help accomplish this, you can instruct Vusers to perform a task at exactly the same moment using a rendezvous point. When a Vuser arrives at the rendezvous point, it waits until the configured percentage of Vusers participating in the rendezvous arrive. When the designated number of Vusers arrive, they are released.

You can configure the way LoadRunner Cloud handles rendezvous points included in scripts.

If scripts containing rendezvous points are included in a load test, open the Rendezvous pane and configure the following for each relevant rendezvous point:

- Enable or disable the rendezvous point. If you disable it, it is ignored by LoadRunner Cloud when the script runs. Other configuration options described below are not valid for disabled rendezvous points.
- Set the percentage of currently running Vusers that must reach the rendezvous point before they can continue with the script.
- Set the timeout (in seconds) between Vusers for reaching the rendezvous point. This means that if a Vuser does not reach the rendezvous point within the configured timeout (from when the previous Vuser reached the rendezvous point), all the Vusers that have already reached the rendezvous point are released to continue with the script.

Note:

- The Rendezvous tab is only displayed if a script containing rendezvous points is included in a load test.
- When you select a rendezvous point in the list, all the scripts in which that rendezvous point is included are displayed on the right (under the configuration settings). If the script is disabled, its name is grayed out.
- If no rendezvous points are displayed for a script that does contain such points, go to Assets > Scripts and reload the script.
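The interaction between the percentage and timeout settings can be illustrated with a simplified Python model. It is a sketch under stated assumptions: the function is hypothetical, arrival times are given in seconds, the required count is rounded up, and the number of running Vusers is treated as fixed, which may differ from how LoadRunner Cloud evaluates a live run.

```python
from typing import Optional


def rendezvous_release_time(arrivals: list[float], running_vusers: int,
                            percent: int, timeout: float) -> Optional[float]:
    """Return the time at which waiting Vusers are released, or None if none arrive.

    Vusers are released when the required percentage has arrived, or when
    the gap since the previous arrival exceeds the timeout.
    """
    if not arrivals:
        return None
    needed = -(-running_vusers * percent // 100)  # rounded up (assumption)
    ordered = sorted(arrivals)
    for index, arrived_at in enumerate(ordered):
        if index > 0 and arrived_at - ordered[index - 1] > timeout:
            return ordered[index - 1] + timeout  # timed out waiting for this Vuser
        if index + 1 >= needed:
            return arrived_at  # enough Vusers have arrived
    return ordered[-1] + timeout  # no further Vuser arrived in time


print(rendezvous_release_time([0, 1, 2, 3], running_vusers=4, percent=100, timeout=30))
print(rendezvous_release_time([0, 1, 50], running_vusers=3, percent=100, timeout=30))
```

In the second call, the third Vuser arrives 49 seconds after the second, so the first two are released when the 30-second timeout expires.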

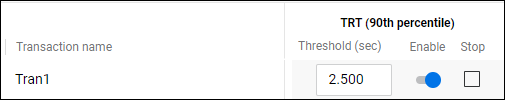

Configure SLAs

Service level agreements (SLAs) are specific goals that you define for your load test run. After a test run, LoadRunner Cloud compares these goals against performance related data that was gathered and stored during the course of the run, and determines whether the SLA passed or failed. Both the Dashboard and Report show the run's compliance with the SLA.

On the SLA page, you set the following goals:

| Goal | Description |

|---|---|

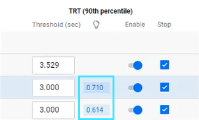

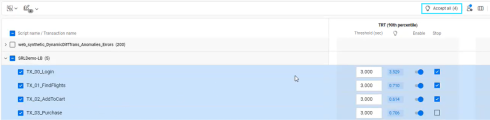

| TRT (nth percentile) |

The expected transaction response time, in seconds, that it takes the specified percent of transactions to complete. By default, the expected TRT is 3 seconds. If the percent of completed transaction exceeds the SLA's value, the SLA is considered "broken". For example, assume you have a transaction named "Login" in your script, where the 90th percentile TRT value is set to 2.50 seconds. If more than 10% of successful transactions that ended during a 5 second window have a response greater than 2.50 seconds, the SLA is considered "broken" and shows a Failed status. For details about how the percentiles are calculated, see Percentiles. Note: The percentile goal for SLAs and all transaction measurements is set in Test settings > Run configurations. This is the percentage of transactions expected to complete successfully. By default, this value is 90%. For details, see Run configurations settings. |

| Failed transactions (%) | By default, the percent of allowed failed transactions is 10%. If the percent of failed transaction exceeds the SLA's value, the SLA is considered "broken" and shows a Fail status. |
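The two goals can be pictured as simple threshold checks. The sketch below is a simplification under stated assumptions: it checks a single 5 second window directly and does not reproduce the percentile calculation or the average over time algorithm that LoadRunner Cloud uses. The function names and parameters are hypothetical.

```python
from typing import Sequence


def trt_sla_broken(response_times: Sequence[float],
                   goal_seconds: float = 3.0,
                   percentile: float = 90.0) -> bool:
    """True if too many transactions in one window exceed the TRT goal."""
    total = len(response_times)
    if total == 0:
        return False
    slow = sum(1 for t in response_times if t > goal_seconds)
    # Broken when the share of slow transactions is strictly greater than
    # (100 - percentile) percent. Multiplying out avoids rounding errors.
    return slow * 100 > (100 - percentile) * total


def failed_sla_broken(passed: int, failed: int,
                      goal_percent: float = 10.0) -> bool:
    """True if the percentage of failed transactions exceeds the goal."""
    total = passed + failed
    if total == 0:
        return False
    return failed * 100 > goal_percent * total


# "Login" with a 90th percentile goal of 2.50 seconds.
window_ok = [1.0] * 9 + [3.0]        # exactly 10% slow: goal still met
window_bad = [1.0] * 8 + [3.0, 4.0]  # 20% slow: goal broken
print(trt_sla_broken(window_ok, goal_seconds=2.5))   # prints False
print(trt_sla_broken(window_bad, goal_seconds=2.5))  # prints True
print(failed_sla_broken(passed=89, failed=11))       # prints True
```

Both checks use a strict comparison, matching the "more than 10%" and "exceeds" wording in the table: a value exactly at the goal does not break the SLA.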

You can set a separate SLA for each of your runs. You can also select multiple SLAs at one time and use bulk actions to apply one or more actions to the set.

You configure one or more SLAs for each load test.

To configure the SLA:

- In the Load tests tab, choose a test and open the SLA pane.

- By default, all transactions for the selected test are displayed, grouped by script name. To remove the grouping and display transactions by transaction name, click Group by, and clear the Script name selection.

- Set transaction response time (TRT) settings:

    - Set the expected time it takes the specified percent of transactions to complete. The default TRT goal is 3 seconds.

      The percentage of transactions expected to complete successfully is shown by the read-only value displayed in the TRT (nth percentile) column header. This value is set in Test settings > Run configurations. For details, see Run configurations settings.

      Example: If the TRT percentile is set to 90 and the TRT time value to 2.500 seconds, then if more than 10% of successful transactions that ended during a 5 second interval have a response time higher than 2.50 seconds, an SLA warning is recorded (using the average over time algorithm). For details, see Percentiles.

    - If you have run your test more than once, LoadRunner Cloud displays a suggested TRT goal value.

    - Select the Stop check box to stop the test if the SLA is broken.

    - If you do not want the TRT (percentile) goal to be used during the test run, turn off the Enable switch adjacent to the TRT (nth percentile) column.

      Note: For tenants created from January 2021 (version 2021.01), SLAs are inactive by default. You can enable them manually or use Configure settings for multiple transactions to enable SLAs for multiple scripts. To change this behavior and have all SLAs enabled by default, submit a service request.

- Set failed transaction settings:

    - If you want the Failed transactions (%) goal value to be used during the test run, turn on the Enable switch.

    - Set the Failed transactions (%) goal value. The default goal value is 10%. If the percentage of failed transactions exceeds this value, the SLA is assigned a status of Failed.

- If you are not using the TRT (nth percentile) or Failed transactions (%) goal, you can hide the goal's column in the grid. To do so, click Select columns, turn off the switch of the unused goal, and then click Hide.

  Note: Hiding a column turns off the Enable switch for all transactions. If you later show the column, you need to turn on the Enable switch for all the transactions that you want to use (the setting values are retained).

- If you want your SLAs to be dependent on Vuser success, click Vuser failure, and turn on the "Set test status to Failed if one or more Vusers fail" switch. The icon changes when this option is on. If even one Vuser fails, the test is given a Failed status.

- You can make bulk updates to your SLA settings:

    - Select multiple SLAs at one time, and then click Configure settings for multiple transactions. The number next to the icon indicates the number of SLAs selected.

    - Select the check box of each field that you want to update.

    - Apply one or more settings to the selection by entering a setting value or changing the Enable switch (On/Off) settings.

      Tip: To keep a field's existing values unchanged, clear its check box.

    - Click Apply to update the SLA settings.

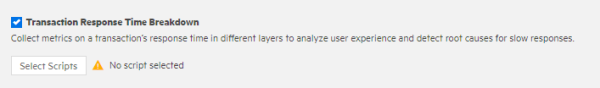

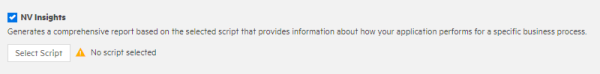
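The bulk update behavior described above, where only the fields whose check boxes are selected get overwritten, can be sketched as follows. This is an illustrative model only. The `SlaRow` class, its field names, and `apply_bulk` are hypothetical and do not correspond to a LoadRunner Cloud API.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Set


@dataclass(frozen=True)
class SlaRow:
    """Hypothetical model of one row in the SLA grid."""
    transaction: str
    trt_goal_seconds: float = 3.0
    trt_enabled: bool = False
    failed_goal_percent: float = 10.0
    failed_enabled: bool = False


def apply_bulk(rows: List[SlaRow], selected: Set[str],
               checked_fields: Dict[str, object]) -> List[SlaRow]:
    """Apply only the checked fields to the selected transactions.

    Fields that are not in checked_fields keep their existing values,
    and rows that are not selected are returned unchanged.
    """
    return [
        replace(row, **checked_fields) if row.transaction in selected else row
        for row in rows
    ]


rows = [SlaRow("Login"), SlaRow("Search"), SlaRow("Logout")]
updated = apply_bulk(
    rows,
    selected={"Login", "Search"},
    checked_fields={"trt_goal_seconds": 2.5, "trt_enabled": True},
)
for row in updated:
    print(row)
```

Here "Login" and "Search" receive the new TRT goal and are enabled, their Failed transactions (%) settings are left as they were, and "Logout" is untouched because it was not selected.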

Generate single user performance data

When enabled, this feature lets you create client side breakdown data to analyze user experience for a specific business process.

Note: You cannot generate single user performance data for:

- Load tests configured for the Iterations run mode.

- Scripts configured to run on on-premises load generators.

Select one or more of the following report types:

| Report type | How to |
|---|---|
| NV Insights | Generate a comprehensive report based on the selected script that provides information about how your application performs for a specific business process. For details, see The NV Insights report. |
| TRT Breakdown | Generate TRT breakdown data. For details, see Transaction response time breakdown data. |

Set a load test as a favorite

When you have configured a load test and it appears in the list of tests in the Load tests tab, you can set the test as a favorite.

To set a load test as a favorite

To set a load test as a favorite, do the following:

- In the Load tests tab, hover over the load test you want to set as a favorite. A hollow yellow star appears.

- Click the hollow yellow star. It changes to a filled yellow star, indicating that the load test has been added to your favorites.

To view and select favorites

To view a list of your favorite load tests and to select a favorite, do the following:

- In the LoadRunner Cloud banner, click the favorites icon. A list of your favorite load tests is displayed.

- Click a load test in the list to open it for editing in the Load tests tab.

To remove a load test from favorites

To remove a load test from your list of favorites, use one of the following options:

- In the LoadRunner Cloud banner, click the favorites icon. A list of your favorite load tests is displayed. Place the cursor over the test you want to remove and click the trash can icon next to it.

- In the Load tests tab, click the yellow star of the load test you want to remove from your favorites. The yellow star disappears, indicating that the load test has been removed from your favorites.
