Deployment

This section describes high-level options for hardware deployment with StarTeam. Because StarTeam can be used by small teams, enterprise-scale organizations, and everything in between, there are many options for deploying its components. These options affect performance, scalability, and fail-over, as well as other considerations such as minimum hardware requirements, high availability, and support for distributed teams.

Performance and scalability factors

StarTeam is a rich application that can be used in a variety of ways. However, this flexibility makes it difficult to predict exactly which hardware configuration is right for your organization. Here are the major factors that affect the performance and scalability of a StarTeam configuration:

| Repository Size | The number of views and items affects the StarTeam Server process’s memory usage, database query traffic, and other resource factors more than any other type of data. Other kinds of data such as users, groups, queries, and filters have a lesser effect on resource demand. Simply put, as the repository gets bigger, more demand is placed on server caching and database queries. |

| Concurrent Users | The number of concurrent users during peak periods has a significant effect on a server. Each concurrent user requires a session, which maintains state, generates commands that utilize worker threads, incurs locking, and so forth. The number of defined users is not nearly as important as the number of concurrent users during peak periods. If you use a single metric for capacity planning, use concurrent users. |

| MPX | MPX boosts server scalability, so whether or not you deploy it and whether or not clients enable it will affect scalability. MPX Cache Agents not only significantly boost check-out performance for remote users, but they also remove significant traffic from the server. In short, deploying MPX will bolster your configuration’s scalability. |

| Bulk Applications | On-line users that utilize a graphical client typically incur low demand on the server. In contrast, bulk applications such as “extractors” for Datamart or Search and “synchronizers” for integrations such as Caliber or StarTeam Quality Center Synchronizer tend to send continuous streams of commands for long durations. A single bulk application can generate demand comparable to 10-20 on-line users. |

| Application Complexity | Because StarTeam is highly customizable, you can build sophisticated custom forms, add many custom fields to artifact types, create custom reports, and so forth. The more sophisticated your usage becomes, the more commands are generated and the larger artifacts become, both of which increase demand. |

Consider these factors when deciding the size of your configuration. Because of the unique factors that define your environment, take these deployment suggestions as guidelines only.
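To make the bulk-application guideline concrete, the following Python sketch converts a mix of on-line users and bulk applications into an "on-line user equivalent" figure. It assumes, as stated in the Bulk Applications row, that one bulk application generates demand comparable to 10 to 20 on-line users; the function names and the default weight of 15 are illustrative choices, not part of StarTeam.

```python
def equivalent_users(online_users: int, bulk_apps: int, weight: int = 15) -> int:
    """Estimate peak demand in on-line-user equivalents.

    Assumption (from the Bulk Applications guideline): one bulk application
    generates demand comparable to `weight` on-line users, with weight 10-20.
    """
    if online_users < 0 or bulk_apps < 0:
        raise ValueError("user and application counts must be non-negative")
    if not 10 <= weight <= 20:
        raise ValueError("weight should stay within the 10-20 range")
    return online_users + bulk_apps * weight


def equivalent_range(online_users: int, bulk_apps: int) -> tuple[int, int]:
    """Return the (low, high) estimate using the 10x and 20x bounds."""
    return (
        equivalent_users(online_users, bulk_apps, weight=10),
        equivalent_users(online_users, bulk_apps, weight=20),
    )


if __name__ == "__main__":
    # Hypothetical peak: 60 on-line users plus 2 extractors/synchronizers.
    print(equivalent_range(60, 2))  # (80, 100)
```

Treat the result as a planning range rather than a measurement; actual demand depends on the other factors above.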

Configuration size

There are no hard rules about what makes a StarTeam configuration small, medium, or large. However, for our purposes, we’ll use these definitions based on concurrent users:

| Small configuration | < 50 concurrent users |

| Medium configuration | 50 to 199 concurrent users |

| Large configuration | 200 or more concurrent users |
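The size definitions above reduce to a simple classification on peak concurrent users. A minimal Python sketch (the function name is hypothetical):

```python
def classify_configuration(peak_concurrent_users: int) -> str:
    """Return 'small', 'medium', or 'large' per the size definitions above."""
    if peak_concurrent_users < 0:
        raise ValueError("peak concurrent users must be non-negative")
    if peak_concurrent_users < 50:
        return "small"
    if peak_concurrent_users < 200:
        return "medium"
    return "large"  # 200 or more concurrent users during peak periods


if __name__ == "__main__":
    for users in (30, 120, 250):
        print(users, classify_configuration(users))
```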

The concurrent user count, rather than data volume or type of users, seems to be the best metric for judging configuration size for purposes of deployment planning. In our experience, the amount of data managed by a StarTeam configuration (particularly items) tends to grow proportionally with the number of projects and views, which grow in proportion to the team size. Moreover, the ratio of online users to bulk applications tends to be roughly the same across organization sizes.

So how big can a configuration get? To date, we’ve seen single StarTeam instances with over 500 concurrent users, over 10,000 total “defined” users, over 4,000 views, tens of millions of items, and up to a terabyte of vault data. With continuous hardware advances and software improvements, these limits get pushed every year.

Note: Not all of these limits have been reached by the same configuration. Although some customers have 4,000 views, not all are actively used. A customer with 10,000 total users typically sees 250-300 concurrent users during peak periods. Interestingly, however, the amount of data managed by the vault seems to have little effect on performance or scalability.

The factors to consider as a configuration size increases are:

| Start-up Time | The StarTeam Server process performs certain maintenance tasks when it starts, such as purging aged audit and security records in the database. As the amount of activity and the time between restarts increase, these tasks lengthen the start-up time. Start-up time is also affected by the number of unique “share trees”, because of the initial caches built at start-up. With well-tuned options, even a large server can start in a few minutes, but start-up can also take 15 minutes or more. |

| Memory Usage | The StarTeam Server process’s memory usage is affected by several factors such as the total number of items, the server caching option settings, the number of active sessions (concurrent users), the number of active views, and the number of command threads required. Caching options can be used to manage memory usage to a point, but sessions, active views, and other run-time factors dictate a certain amount of memory usage. On a 32-bit Microsoft Windows platform, the StarTeam Server process is limited to 2 GB of virtual memory. If you enable 4 GT RAM Tuning, which raises the virtual memory limit of a single process on a 32-bit system, this limit can be pushed closer to 3 GB. Running the 32-bit StarTeam Server on a 64-bit operating system allows the process to grow up to 4 GB. Running the native 64-bit StarTeam Server removes these restrictions; the process is then constrained by the physical memory available on the server.

Tip: We highly recommend using the 64-bit version of the StarTeam Server for better performance and scalability. |

| Command Size | Some client requests return a variable response size based on the number of items requested, the number of users or groups defined, the number of labels owned by a view, and so forth. Large server configurations can cause certain commands to return large responses, which take longer to transfer, especially on slower networks. Clients will see this as reduced performance for certain operations such as opening a project or a custom form. |
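As a quick reference for the Memory Usage row above, this Python sketch encodes the process memory ceilings it lists. The environment labels and function name are illustrative, and the 3 GB figure for 4 GT RAM Tuning is an approximation, since the text says only "closer to 3 GB".

```python
from typing import Optional

# Approximate memory ceiling (GB) for the StarTeam Server process, keyed by
# (server build, operating environment), per the Memory Usage row.
# None means no process-level ceiling: physical memory becomes the constraint.
MEMORY_CEILING_GB: dict[tuple[str, str], Optional[float]] = {
    ("32-bit", "32-bit Windows"): 2.0,
    ("32-bit", "32-bit Windows with 4 GT RAM Tuning"): 3.0,  # "closer to 3 GB"
    ("32-bit", "64-bit OS"): 4.0,
    ("64-bit", "64-bit OS"): None,
}


def memory_ceiling_gb(server_build: str, environment: str, physical_gb: float) -> float:
    """Return the approximate memory the server process can grow to."""
    key = (server_build, environment)
    if key not in MEMORY_CEILING_GB:
        raise ValueError(f"combination not covered by this section: {key}")
    ceiling = MEMORY_CEILING_GB[key]
    return physical_gb if ceiling is None else ceiling


if __name__ == "__main__":
    print(memory_ceiling_gb("32-bit", "64-bit OS", physical_gb=32.0))  # 4.0
    print(memory_ceiling_gb("64-bit", "64-bit OS", physical_gb=32.0))  # 32.0
```

The comparison makes the Tip concrete: on the same 32 GB machine, only the native 64-bit server can use most of the installed memory.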

Multiple configurations on the same server

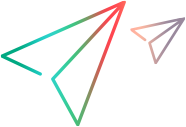

For small- to medium-sized server configurations, you can place all StarTeam Server components on a single machine. Furthermore, you can deploy all components for multiple configurations on the same machine, depending on the total number of concurrent users across all configurations. The accompanying diagram shows both basic and MPX components deployed.

You should use a single machine for all StarTeam Server components only when the total number of concurrent users for all configurations does not exceed 100. Even though a single configuration can support more than 100 users, each configuration has a certain amount of overhead. Consequently, we recommend that when the total peak concurrent user count reaches 100, it’s time to move at least one configuration to its own machine.

With a single machine, all StarTeam Server processes, the root Message Broker, root MPX Cache Agents, and the database server process execute on one machine. Here are some rules of thumb for this layout:

- Start with 2 cores and 2 GB of memory for the database server process.

- Add 2 cores and 2 GB of memory per StarTeam configuration.

- If you use locally-attached disk for each StarTeam configuration’s vault and database partitions, use separate, fast drives to improve concurrency. Also, the disks should be mirrored to prevent a single point of failure.

- If you deploy MPX, all StarTeam configurations can share a single root MPX Message Broker. Though not shown, one or more remote Message Brokers may be connected to the root Message Broker.

- If you deploy MPX Cache Agents, each configuration needs its own root MPX Cache Agent, which can share the root Message Broker. Though not shown, one or more remote MPX Cache Agents may be connected to each root MPX Cache Agent.

- Be sure to configure each StarTeam Server, Message Broker, and root MPX Cache Agent process to accept TCP/IP connections on a different port.
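The last rule above, one listening port per process, is easy to verify before installation. This Python sketch checks a planned port assignment for clashes; the process names and port numbers are hypothetical examples, not StarTeam defaults.

```python
from collections import Counter


def find_port_conflicts(listeners: dict[str, int]) -> dict[int, list[str]]:
    """Return every TCP port claimed by more than one process on the machine."""
    for name, port in listeners.items():
        if not 1 <= port <= 65535:
            raise ValueError(f"{name}: {port} is not a valid TCP port")
    counts = Counter(listeners.values())
    conflicts: dict[int, list[str]] = {}
    for name, port in listeners.items():
        if counts[port] > 1:
            conflicts.setdefault(port, []).append(name)
    return conflicts


if __name__ == "__main__":
    # Hypothetical port plan for two configurations sharing one machine.
    plan = {
        "Cfg1 StarTeam Server": 49201,
        "Cfg2 StarTeam Server": 49202,
        "root Message Broker": 5101,
        "Cfg1 root MPX Cache Agent": 5201,
        "Cfg2 root MPX Cache Agent": 5201,  # deliberate clash
    }
    print(find_port_conflicts(plan))
    # {5201: ['Cfg1 root MPX Cache Agent', 'Cfg2 root MPX Cache Agent']}
```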

Using these guidelines, you can deploy three to four small StarTeam configurations on one machine, but only if the total number of concurrent users doesn’t peak above 100 or so. Otherwise, the various processes could begin to compete for resources (CPU, memory, disk I/O, and/or network bandwidth), adversely affecting responsiveness. Also, if you start out with the single-server configuration, plan on moving components to their own machines as demand grows over time.
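The rules of thumb above amount to simple arithmetic. This Python sketch totals cores and memory for one machine hosting the database server plus n StarTeam configurations, and flags when the combined peak load reaches the 100-user ceiling. The names are hypothetical, the figures cover only the database and StarTeam Server processes (not operating system or MPX overhead), and the sketch treats 100 as the point at which to start splitting, following the earlier recommendation.

```python
from dataclasses import dataclass

# Move at least one configuration to its own machine once the combined
# peak concurrent user count reaches about this value.
SINGLE_MACHINE_USER_LIMIT = 100


@dataclass(frozen=True)
class SingleMachinePlan:
    cores: int
    memory_gb: int
    total_peak_users: int

    @property
    def fits_single_machine(self) -> bool:
        return self.total_peak_users < SINGLE_MACHINE_USER_LIMIT


def plan_single_machine(peak_users_per_config: list[int]) -> SingleMachinePlan:
    """Apply the rules of thumb: 2 cores + 2 GB for the database server,
    plus 2 cores + 2 GB for each StarTeam configuration."""
    if not peak_users_per_config:
        raise ValueError("at least one configuration is required")
    n = len(peak_users_per_config)
    return SingleMachinePlan(
        cores=2 + 2 * n,
        memory_gb=2 + 2 * n,
        total_peak_users=sum(peak_users_per_config),
    )


if __name__ == "__main__":
    plan = plan_single_machine([30, 25, 20])  # three small configurations
    print(plan, plan.fits_single_machine)
```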

Caution: The disadvantage of deploying multiple configurations on a single machine is that they are all affected when the machine must be upgraded, patches need to be installed, someone kicks the power plug, and so forth.

Medium configurations

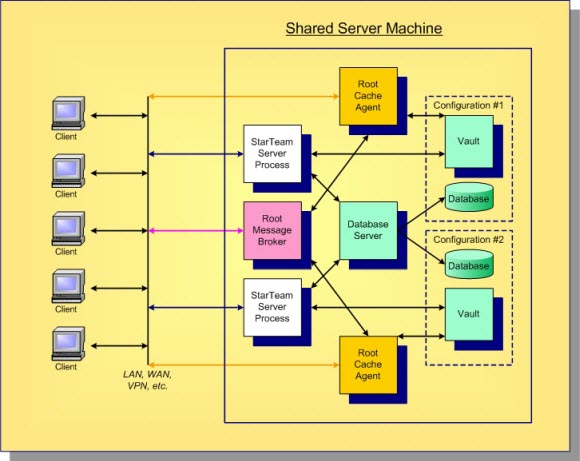

As your configuration size grows beyond what could be called a small configuration, the first thing to move to its own machine is the database process. When you move the database process to its own machine, install a high-speed dedicated link between the StarTeam Server and database machines. Ideally, a trace route between the StarTeam Server machine and the database machine should show a single hop.

Separate database machine

With the database server on a separate machine, multiple StarTeam Server processes and MPX components can still be deployed on the same shared server machine. Because the database processing is offloaded to another machine, the total number of concurrent users can be higher, up to 200-300 or so. A shared database server is shown below.

In this diagram, a locally-attached disk is assumed for the server and database machines.

Storage server

With multiple configurations, you have multiple vaults and databases, possibly on separate disks. As you consider backup procedures, mirroring for high availability, and other administrative factors, you may find it more cost-effective to place all persistent data on a shared disk server (SAN or NFS), as shown below.

Using a shared storage server for all configuration vaults and databases has several advantages. Depending on the storage system, all important data can be backed up with a single procedure. Hardware to support mirroring or other RAID configurations can be concentrated in a single place. Many storage systems allow additional disks to be added dynamically or failed disks to be hot-swapped.

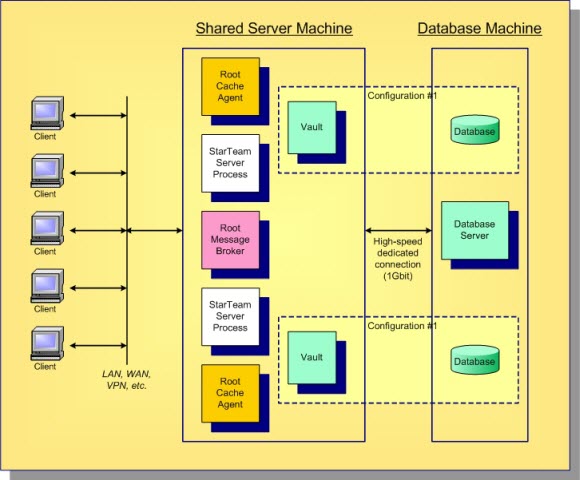

Large configurations

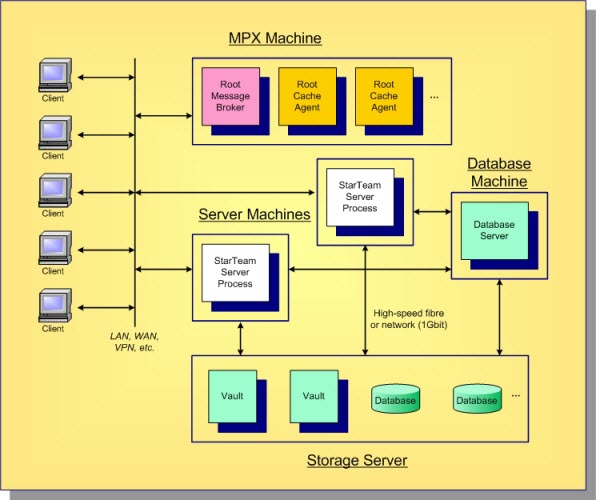

We consider a large configuration to be one that supports 200 concurrent users or more during peak periods. For these configurations, place the StarTeam Server process on its own system. The database process should also execute on its own machine. Though not strictly necessary, the root MPX Message Broker and MPX Cache Agent processes can also benefit from executing on yet another machine. Especially when concurrent users rise to 200, 300, or more, moving these processes to their own machine can remove network traffic and other resource contention from the StarTeam Server machine. A typical deployment of multiple large configurations is shown below.

The key points of this multiple, large configuration deployment are:

- The StarTeam Server process for each configuration executes on its own machine. This is typically a high-end machine with a multi-core CPU and at least 16 GB of memory running a 64-bit OS. If you have more than 100 concurrent users, we recommend that you use a 64-bit version of the StarTeam Server.

- The database server executes on its own machine. Multiple StarTeam configurations can share the same database server. (As many as eight configurations have been set up to use the same database server without performance issues.) Each StarTeam configuration uses its own “schema instance”. Each StarTeam Server machine should have a high-speed dedicated connection to the database machine.

- The root MPX Message Broker and root MPX Cache Agents can all execute on a single machine. Each root MPX Cache Agent requires access to the appropriate vault, but a high-speed dedicated connection is not necessary. File access over the network (for example, using UNC paths) is sufficient. If you use the StarTeam Notification Agent, you can put it on this machine as well.

- A shared storage server such as a SAN server can be used for all StarTeam vaults and database partitions. Depending on the hardware, an interface (for example, a “host” card) may be needed for each StarTeam Server machine in order to access the SAN.
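Pulling the thresholds from this section together, the following Python sketch suggests a layout from each configuration's peak concurrent users. It is a planning aid under stated assumptions: the 300-user upper bound for a shared server machine is taken from the "up to 200-300 or so" estimate and is adjustable, and the SPLIT_SERVERS outcome is an extrapolation of the advice to move configurations to their own machines as demand grows, not a separately documented tier. All names are hypothetical.

```python
from enum import Enum


class Layout(Enum):
    SINGLE_MACHINE = "all components, including the database, on one machine"
    SEPARATE_DATABASE = "StarTeam Server and MPX processes shared; database on its own machine"
    SPLIT_SERVERS = "configurations spread across several server machines; separate database"
    LARGE = "each large configuration on its own server machine; separate database and MPX machines"


def recommend_layout(peak_users_by_config: dict[str, int],
                     shared_server_limit: int = 300) -> Layout:
    """Pick a deployment layout from peak concurrent users per configuration.

    Thresholds (assumed from this section): under ~100 total users for a
    single machine, up to ~300 total once the database is moved off, and
    200 users for a configuration to count as large.
    """
    if not peak_users_by_config:
        raise ValueError("at least one configuration is required")
    total = sum(peak_users_by_config.values())
    largest = max(peak_users_by_config.values())
    if largest >= 200:
        return Layout.LARGE
    if total < 100:
        return Layout.SINGLE_MACHINE
    if total <= shared_server_limit:
        return Layout.SEPARATE_DATABASE
    return Layout.SPLIT_SERVERS


if __name__ == "__main__":
    print(recommend_layout({"Cfg1": 120, "Cfg2": 90}).name)  # SEPARATE_DATABASE
```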

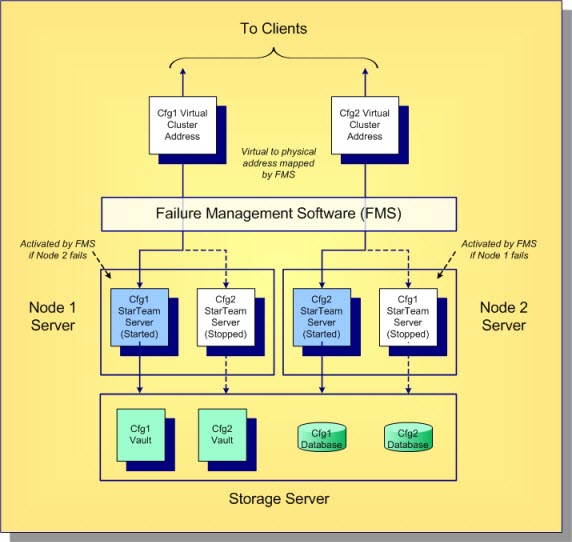

Active/passive clustering

StarTeam works with active/passive clustering, in which a “warm standby” node is maintained for quick fail-over. One general rule to remember is that only one StarTeam Server process can be active for a given configuration at one time. However, StarTeam configuration files can be copied to multiple machines along with all the necessary software. Also, multiple machines under the control of Failure Management Software (FMS) can be connected to the same database (which may be clustered itself), and they can be connected to the same shared storage server for vault access.

Active/passive clustering works like this: the StarTeam Server process on one node in the cluster is started, making it the active node for that configuration. The IP address of the active node is mapped to a virtual “cluster address”, which is the address to which clients connect. If the active node fails, the FMS takes care of fail-over: it starts the StarTeam Server process on a passive machine, making it the active node, and remaps the cluster address to the new active node’s IP address. Running clients receive a disconnect message and have to reconnect, but in most cases the fail-over will occur quickly, so clients can immediately reconnect.
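The fail-over sequence above can be modeled as a small state machine. This Python sketch is a toy simulation, not an interface to any real Failure Management Software: it enforces the one-active-server rule, tracks which node's IP address the cluster address maps to, and promotes a healthy passive node when the active one fails. The node names, IP addresses, and cluster name are hypothetical.

```python
from typing import Optional


class ActivePassiveCluster:
    """Toy model of active/passive fail-over for one StarTeam configuration.

    Only one node may run the StarTeam Server process for the configuration
    at a time; clients always connect to the virtual cluster address.
    """

    def __init__(self, cluster_address: str, node_ips: dict[str, str]) -> None:
        self.cluster_address = cluster_address
        self.node_ips = dict(node_ips)  # node name -> physical IP address
        self.failed: set[str] = set()
        self.active: Optional[str] = None

    def start(self, node: str) -> None:
        """Start the server on `node`, making it the active node."""
        if self.active is not None:
            raise RuntimeError(f"{self.active} is already active for this configuration")
        if node not in self.node_ips or node in self.failed:
            raise ValueError(f"cannot start the server on {node}")
        self.active = node

    def resolve(self) -> Optional[str]:
        """IP address the cluster address currently maps to (None if no active node)."""
        return None if self.active is None else self.node_ips[self.active]

    def node_failed(self, node: str) -> Optional[str]:
        """Simulate the FMS reacting to a node failure; return the active node afterwards."""
        self.failed.add(node)
        if node != self.active:
            return self.active  # a passive node failed: nothing to fail over
        self.active = None  # running clients are disconnected here
        for candidate in self.node_ips:
            if candidate not in self.failed:
                self.start(candidate)  # promote a passive node; address is remapped
                return candidate
        return None  # no healthy node left


if __name__ == "__main__":
    cluster = ActivePassiveCluster(
        "starteam-cluster", {"nodeA": "10.0.0.11", "nodeB": "10.0.0.12"}
    )
    cluster.start("nodeA")
    print(cluster.resolve())             # 10.0.0.11
    print(cluster.node_failed("nodeA"))  # nodeB
    print(cluster.resolve())             # 10.0.0.12
```

Clients reconnecting after the fail-over reach the new active node because they only ever use the cluster address.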

When you have multiple StarTeam configurations, you can “pair” machines so that the active node for one configuration is the passive node for a second configuration and vice versa. Hence, both machines are actively used, and only in a fail-over scenario must one machine support the processing of both configurations. An example of active/passive cluster configuration is shown below.

In this example, the StarTeam configurations Cfg1 and Cfg2 are “paired”, hence one node is active and one node is passive for each one. (The database process is not shown – it might also be deployed on a cluster.)
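The pairing scheme can be checked by asking which node runs which configuration's server in normal operation and after a failure. In this Python sketch, the node names are hypothetical, and the function only computes placement; it does not model the fail-over mechanics themselves.

```python
from collections.abc import Iterable


def running_configurations(
    pairs: dict[str, tuple[str, str]], failed: Iterable[str] = ()
) -> dict[str, list[str]]:
    """Map each healthy node to the configurations whose server it is running.

    `pairs` maps a configuration to (normally active node, passive node).
    """
    down = set(failed)
    load: dict[str, list[str]] = {}
    for config, (active, passive) in pairs.items():
        if active not in down:
            host = active
        elif passive not in down:
            host = passive  # fail-over: the passive node takes over
        else:
            continue  # both nodes down: configuration unavailable
        load.setdefault(host, []).append(config)
    return load


if __name__ == "__main__":
    pairs = {"Cfg1": ("node1", "node2"), "Cfg2": ("node2", "node1")}
    print(running_configurations(pairs))             # {'node1': ['Cfg1'], 'node2': ['Cfg2']}
    print(running_configurations(pairs, {"node1"}))  # {'node2': ['Cfg1', 'Cfg2']}
```

The second call shows the trade-off of pairing: both machines do useful work normally, but each must be sized to carry both configurations during a fail-over.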