Run tests in parallel using a command line

Use a command line to trigger the ParallelRunner tool to run multiple tests in parallel.

Run tests in parallel

The ParallelRunner tool is located in the <Installdir>/bin directory. Use a command line to trigger the tool for parallel testing.

To run the tests in parallel:

- Close OpenText Functional Testing. ParallelRunner and OpenText Functional Testing cannot run at the same time.

- Run your tests in parallel from the command line interface by configuring your test run using one of the following methods:

  - Define test run information using a command line

  - Manually create a .json file to define test run information

  If you use a .json file, you can synchronize and control your parallel run by creating dependencies between test runs and controlling the start time of the runs. For details, see Use conditions to synchronize your parallel run.

Tests run using ParallelRunner are run in Fast mode. For details, see Fast mode.

Define test run information using a command line

Relevant for GUI Web and Mobile tests and API tests

When running tests from the command line, run each test separately with individual commands.

For GUI tests, a single command runs the test multiple times if you provide alternatives. You can provide alternatives for the following:

- Browsers

- Devices

- Data tables

- Test parameters

To run tests from the command line, use the syntax defined in the sections below. Use semicolons (;) to separate the alternatives.

ParallelRunner runs the test as many times as necessary to test all combinations of the alternatives you provide.

For example:

Run the test three times, once for each browser:

ParallelRunner -t "C:\GUIWebTest" -b "Chrome;IE;Firefox"

Run the test four times, once for each data table on each browser:

ParallelRunner -t "C:\GUIWebTest" -b "Chrome;Firefox" -dt ".\Datatable1.xlsx;.\Datatable2.xlsx"

Run the test eight times, once for each combination of browser, data table, and set of test parameters:

ParallelRunner -t "C:\GUIWebTest" -b "Chrome;Firefox" -dt ".\Datatable1.xlsx;.\Datatable2.xlsx" -tp "param1:value1, param2:value2; param1:value3, param2:value4"
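The combination rule can be illustrated with a short Python sketch. It is not part of ParallelRunner: the helpers split_alternatives and expand_runs are hypothetical, and they assume that each option value is split on semicolons and that ParallelRunner runs every combination (the Cartesian product) of the alternatives, which reproduces the 3, 4, and 8 runs in the examples above. The naive split does not handle test parameter values wrapped in \" that themselves contain semicolons.

```python
from itertools import product


def split_alternatives(value):
    """Split an option value on semicolons; an omitted option counts as one run."""
    if not value:
        return [None]  # option not given: the test's own default is used once
    return [item.strip() for item in value.split(";")]


def expand_runs(browsers="", devices="", data_tables="", test_params=""):
    """Return one tuple per run, covering every combination of alternatives.

    Hypothetical helper for illustration only; the naive split ignores
    backslash-quote escaping in test parameter values.
    """
    return list(product(
        split_alternatives(browsers),
        split_alternatives(devices),
        split_alternatives(data_tables),
        split_alternatives(test_params),
    ))


if __name__ == "__main__":
    runs = expand_runs(
        browsers="Chrome;Firefox",
        data_tables=r".\Datatable1.xlsx;.\Datatable2.xlsx",
        test_params="param1:value1, param2:value2; param1:value3, param2:value4",
    )
    print(len(runs))  # 8
    for run in runs:
        print(run)
```

Running the script prints 8 followed by the eight (browser, device, data table, parameter set) tuples, where None marks an option that was not provided.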

Tip: Alternatively, reference a .json file with multiple tests configured in a single file. In this file, separately configure each test run. For details, see Manually create a .json file to define test run information.

| Test type | Description |
|---|---|
| Web test command line syntax | Run each web test from the command line. If you specify multiple browsers, the test is run multiple times, one run on each browser. For example: ParallelRunner -t "C:\GUIWebTest" -b "Chrome;IE;Firefox" |
| Mobile test command line syntax | Run each mobile test from the command line. If you specify multiple devices, the test is run multiple times, one run on each device. For example: ParallelRunner -t "C:\GUITest_Demo" -d "deviceID:HT69K0201987;manufacturer:samsung,osType:Android,osVersion:>6.0;manufacturer:motorola,model:Nexus 6" -r "C:\parallelexecution" Wait for an available device: You can instruct ParallelRunner to wait for an appropriate device to become available instead of failing a test run immediately if no device is available. To do this, add the -we option to your command. |
| API test command line syntax | Run each API test from the command line, using -t to specify the test folder. The -b, -d, -dt, and -tp options apply only to GUI web and mobile tests. |
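The device string used with -d follows a fixed layout: semicolons separate devices, commas separate attributes, and a colon separates each attribute name from its value. The following Python sketch splits such a string to show how the mobile example above resolves into three device alternatives. parse_devices is a hypothetical helper, and ParallelRunner's own parser may behave differently in edge cases.

```python
def parse_devices(spec):
    """Split a -d value into one attribute dict per device alternative.

    Semicolons separate devices, commas separate attributes, and the first
    colon in each attribute separates its name from its value, so values
    such as ">=11.0" are kept intact.
    """
    devices = []
    for alternative in spec.split(";"):
        attributes = {}
        for pair in alternative.split(","):
            name, _, value = pair.partition(":")
            attributes[name] = value
        devices.append(attributes)
    return devices


if __name__ == "__main__":
    spec = ("deviceID:HT69K0201987;"
            "manufacturer:samsung,osType:Android,osVersion:>6.0;"
            "manufacturer:motorola,model:Nexus 6")
    for device in parse_devices(spec):
        print(device)
```

Because the layout forbids extra spaces around the separators, the sketch does not strip whitespace; the space inside "Nexus 6" is part of the value and is preserved.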

Use the following options when running tests directly from the command line.

- Option names are not case sensitive.

- Use a dash (-) followed by the option abbreviation, or a double-dash (--) followed by the option's full name.

| Command line option | Description |
|---|---|
| -t | String. Required. Defines the path to the folder containing the test you want to run. If your path contains spaces, you must surround the path with double quotes (""). For example: -t ".\GUITest Demo" Multiple tests are not supported in the same path. |
| -b, --browsers | String. Optional. Supported for web tests. Define multiple browsers using semicolons (;). ParallelRunner runs the test multiple times, to test on all specified browsers. Supported values include: Chrome, Chrome_Headless, ChromiumEdge, Edge, Firefox, Firefox64, IE, IE64, Safari |
| -d | String. Optional. Supported for mobile tests. Define multiple devices using semicolons (;). ParallelRunner runs the test multiple times, to test on all specified devices. Define devices using a specific device ID, or find devices by capabilities, using the following attributes in this string: deviceID, manufacturer, model, osType, osVersion. Note: Do not add extra spaces around the commas, colons, or semicolons in this string. We recommend always surrounding the string in double quotes ("") to support any special characters. For example: -d "manufacturer:apple,osType:iOS,osVersion:>=11.0" |
| -dt | String. Optional. Supported for web and mobile tests. Defines the path to the data table you want to use for this test. The table you provide is used instead of the data table saved with the test. Define multiple data tables using semicolons (;). ParallelRunner runs the test multiple times, to test with all specified data tables. If your path contains spaces, you must surround the path with double quotes (""). For example: -dt ".\Data Tables\Datatable1.xlsx;.\Data Tables\Datatable2.xlsx" |
| -o | String. Optional. Determines the result output shown in the console during and after the test run. |
| -r | String. Optional. Defines the path where you want to save your test run results. By default, run results are saved at %Temp%\TempResults. If your string contains spaces, you must surround the string with double quotes (""). For example: -r "%Temp%\Temp Results" |
| -rl | Optional. Include in your command to automatically open the run results when the test run starts. Use the -rr (--report-auto-refresh) option to determine how often the results are refreshed. For more details, see Sample ParallelRunner response and run results. |
| -rn | String. Optional. Defines the report name, which is displayed in the browser tab when you open the parallel run's summary report. By default, the name is Parallel Report. Customize this name to help distinguish between runs when you open multiple reports at once. A timestamp is also added to each report name for this purpose. If your string contains spaces, you must surround the string with double quotes (""). For example: -rn "My Report Title" |
| -rr, --report-auto-refresh | Optional. Determines how often an open run results file is automatically refreshed, in seconds. For example: -rr 5 Default, if no integer is provided: 5 seconds. |
| -tp, --testParameters | String. Optional. Supported for web and mobile tests. Defines the values to use for the test parameters. Provide a comma-separated list of parameter name-value pairs. For test parameters that you do not specify, the default values defined in the test itself are used. For example: -tp "param1:value1,param2:value2" Define multiple test parameter sets using semicolons (;). ParallelRunner runs the test multiple times, to test with all specified test parameter sets. For example: -tp "param1:value1, param2:value2; param1:value3, param2:value4" If your parameter value includes commas (,), semicolons (;), or colons (:), surround the value with a backslash followed by a double quote (\"). For example, if the value of TimeParam is 10:00;00, use: -tp "TimeParam:\"10:00;00\"" Note: You cannot include double quotes (") inside a parameter value using the command line options. Instead, use a JSON configuration file for your ParallelRunner command. |
| -we | Optional. Supported for mobile tests. Include in your command to instruct each mobile test run to wait for an appropriate device to become available instead of failing immediately if no device is available. |
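If you generate -tp values from a script, the quoting rules in the -tp description can be applied automatically. A minimal Python sketch, assuming the rules exactly as stated above: the hypothetical format_param_set wraps any value that contains a comma, semicolon, or colon in backslash-escaped double quotes, and rejects values containing a double quote because the command line options cannot express them. The output is the text that goes inside the outer double quotes of -tp.

```python
def format_param_set(params):
    """Format one test parameter set as name:value pairs for -tp.

    Values containing a comma, semicolon, or colon are wrapped in
    backslash-escaped double quotes. Values containing a double quote
    are rejected, because the command line cannot express them.
    """
    pairs = []
    for name, value in params.items():
        if '"' in value:
            raise ValueError(f"{name}: use a .json file for values containing double quotes")
        if any(ch in value for ch in ",;:"):
            value = '\\"' + value + '\\"'
        pairs.append(f"{name}:{value}")
    return ",".join(pairs)


def format_test_parameters(param_sets):
    """Join several parameter sets with semicolons; each set is one alternative."""
    return ";".join(format_param_set(params) for params in param_sets)


if __name__ == "__main__":
    print(format_test_parameters([
        {"param1": "value1", "param2": "value2"},
        {"param1": "value3", "param2": "value4"},
    ]))
    print(format_param_set({"TimeParam": "10:00;00"}))
```

The second line of output is TimeParam:\"10:00;00\", which matches the TimeParam example in the -tp description.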

Manually create a .json file to define test run information

Relevant for GUI Web, Mobile, and Java tests, and API tests

Manually create a .json file to specify multiple tests to run in parallel and define test information for each test run. Then reference the .json file directly from the command line.

To create a .json file and start parallel testing with the file:

- In a .json file, add a separate entry in the parallelRuns array for each test run. Use the .json options to describe the environment, data tables, and parameters to use for each run.

  Tip: You can use the ParallelRunner UI tool to automatically create a .json file. For details, see Configure your parallel test run.

- For GUI mobile testing, you can include the OpenText Functional Testing Lab connection settings in the .json file together with the test information. For how to define the connection settings, see Sample .json file.

  The .json file takes precedence over the connection settings defined in Tools > Options > GUI Testing > Mobile in OpenText Functional Testing.

- Reference the .json file in the command line using the -c command line option. For example:

  ParallelRunner -c parallelexecution.json

- Add additional command line options to configure your run results. For details, see -o, -rl, and -rr.

  These are the only command line options you can use together with a .json configuration file. Any other command line options are ignored.
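Because the configuration is plain JSON, the steps above can also be scripted. The following Python sketch uses only the standard json module and the option names from the sample file and option reference in this topic; build_config is a hypothetical helper, not part of ParallelRunner. json.dumps also applies the backslash escaping that the testParameter option requires for backslashes and double quotes.

```python
import json


def build_config(report_path, runs, report_name=None, wait_for_environment=False):
    """Assemble a ParallelRunner .json configuration as a Python dict.

    Uses the documented top-level keys: reportPath, reportName,
    waitForEnvironment, and parallelRuns (one entry per test run).
    """
    config = {"reportPath": report_path}
    if report_name:
        config["reportName"] = report_name
    if wait_for_environment:
        config["waitForEnvironment"] = True
    config["parallelRuns"] = list(runs)
    return config


if __name__ == "__main__":
    runs = [
        {
            "test": r"C:\Users\qtp\Documents\Functional Testing",
            "testParameter": {"para1": 'val"1'},  # json.dumps escapes the quote
            "reportSuffix": "Samsung_OS_above_6",
            "env": {"mobile": {"device": {"manufacturer": "samsung",
                                          "osVersion": ">6.0",
                                          "osType": "Android"}}},
        },
        {"test": r"C:\Users\qtp\Documents\API Test", "reportSuffix": "API"},
    ]
    config = build_config(r"C:\parallelexecution", runs,
                          report_name="My Report Title",
                          wait_for_environment=True)
    # Save this output as parallelexecution.json, then run:
    #   ParallelRunner -c parallelexecution.json
    print(json.dumps(config, indent=2))
```

Generating the file this way avoids hand-escaping Windows paths, since every backslash in a Python string is written to the file as \\.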

Refer to the following sample file. In this file, the last test is run on both a local desktop browser and a mobile device. See also Sample .json configuration file with synchronization.

Sample .json file

```json
{
  "reportPath": "C:\\parallelexecution",
  "reportName": "My Report Title",
  "waitForEnvironment": true,
  "parallelRuns": [
    {
      "test": "C:\\Users\\qtp\\Documents\\Functional Testing",
      "dataTable": "C:\\Data Tables\\Datatable1.xls",
      "testParameter": {
        "para1": "val1",
        "para2": "val2"
      },
      "reportSuffix": "Samsung_OS_above_6",
      "env": {
        "mobile": {
          "device": {
            "manufacturer": "samsung",
            "osVersion": ">6.0",
            "osType": "Android"
          }
        }
      }
    },
    {
      "test": "C:\\Users\\qtp\\Documents\\Functional Testing",
      "reportSuffix": "ID_HT69K0201987",
      "env": {
        "mobile": {
          "device": {
            "deviceID": "HT69K0201987"
          }
        }
      }
    },
    {
      "test": "C:\\Users\\qtp\\Documents\\API Test",
      "reportSuffix": "API"
    },
    {
      "test": "C:\\Users\\qtp\\Documents\\Functional Testing",
      "dataTable": "C:\\Data Tables\\Datatable3.xls",
      "reportSuffix": "Mobile_Web",
      "env": {
        "web": {
          "browser": "Chrome"
        },
        "mobile": {
          "device": {
            "manufacturer": "motorola",
            "model": "Nexus 6",
            "osVersion": "7.0",
            "osType": "Android"
          }
        }
      }
    }
  ],
  "settings": {
    "mc": {
      "Protocol": "http",
      "Hostname": "xxxxx",
      "Port": 8080,
      "Pathname": null,
      "AuthType": 1,
      "Account": "default",
      "Username": "xxxxx",
      "Password": "xxxxx",
      "ClientId": "xxxxx",
      "SecretKey": "xxxxx",
      "TenantId": "xxxxx",
      "WorkspaceId": null,
      "Proxy": null
    }
  }
}
```

Option reference for creating a .json file

Define the following options (case sensitive) in a .json file:

| Option | Description |
|---|---|
| reportPath | String. Defines the path where you want to save your test run results. By default, run results are saved at %Temp%\TempResults. |
| reportName | String. Defines the report name, which is displayed in the browser tab when you open the parallel run's summary report. By default, the report name is the same as the .json file name. Customize this name to help distinguish between runs when you open multiple reports at once. A timestamp is also added to each report name for this purpose. |
| test | String. Defines the path to the folder containing the test you want to run. |
| reportSuffix | String. Defines a suffix to append to the end of your run results file name. For example, you may want to add a suffix that indicates the environment on which the test ran, such as "reportSuffix": "Samsung_OS_above_6". Default: "[N]", which indicates the index number of the current result instance if there are multiple results for the same test in the same parallel run. |
| browser | String. Defines the browser to use when running the web test. Supported values include: Chrome, Chrome Headless, ChromiumEdge, Edge, IE, IE64, Firefox, Firefox64, Safari |
| deviceID | String. Defines a device ID for a specific device in OpenText Functional Testing Lab. Note: When both deviceID and device capability attributes are provided, the device capability attributes are ignored. |
| manufacturer | String. Defines a device manufacturer. |
| model | String. Defines a device model. |
| osVersion | String. Defines a device operating system version. For example: "7.0" or ">6.0". |
| osType | String. Defines a device operating system. For example: "Android". |
| dataTable | String. Supported for GUI Web, Mobile, and Java tests. Defines the path to the data table you want to use for this test run. The table you provide is used instead of the data table saved with the test. Place the dataTable definition in the section that describes the relevant test run. |
| testParameter | Supported for GUI Web, Mobile, and Java tests. Defines the values to use for the test parameters in this test run, as a JSON object of parameter name-value pairs. For example: "testParameter":{"para1":"val1","para2":"val2"} Place the testParameter definition in the section that describes the relevant test run. If your parameter value includes a backslash (\) or double quote ("), add a backslash before the character. For example: "para1":"val\"1" assigns the value val"1 to the parameter para1. For test parameters that you do not specify, the default values defined in the test itself are used. |
| waitForEnvironment | Set this option to true to instruct each mobile test run to wait for an appropriate device to become available instead of failing immediately if no device is available. |
| Hostname / Port | The IP address and port number of your OpenText Functional Testing Lab server. Default port: 8080 |
| AuthType | The authentication mode to use for connecting to OpenText Functional Testing Lab. |
| Username / Password | The login credentials for the OpenText Functional Testing Lab server. |
| ClientId / SecretKey / TenantId | The access keys received from OpenText Functional Testing Lab. |
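A small pre-flight check can flag configurations where the deviceID precedence rule silently discards capability attributes. This Python sketch is hypothetical: check_runs is not a ParallelRunner feature, and it also assumes that every parallelRuns entry should include a test path, by analogy with the required -t command line option.

```python
CAPABILITY_KEYS = ("manufacturer", "model", "osVersion", "osType")


def check_runs(config):
    """Return warnings for a parsed ParallelRunner .json configuration."""
    warnings = []
    for index, run in enumerate(config.get("parallelRuns", []), start=1):
        # Assumption: each run needs a test folder, as -t is required on the command line.
        if "test" not in run:
            warnings.append(f"run {index}: missing 'test' path")
        device = run.get("env", {}).get("mobile", {}).get("device", {})
        ignored = [key for key in CAPABILITY_KEYS if key in device]
        # Documented rule: when deviceID is present, capability attributes are ignored.
        if "deviceID" in device and ignored:
            warnings.append(f"run {index}: deviceID is set, so {', '.join(ignored)} will be ignored")
    return warnings


if __name__ == "__main__":
    sample = {
        "parallelRuns": [
            {
                "test": r"C:\Users\qtp\Documents\Functional Testing",
                "env": {"mobile": {"device": {"deviceID": "HT69K0201987",
                                              "osType": "Android"}}},
            },
            {"reportSuffix": "API"},
        ]
    }
    for warning in check_runs(sample):
        print(warning)
```

For the sample, the script reports that osType is ignored in run 1 and that run 2 has no test path.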

Use conditions to synchronize your parallel run

To synchronize and control your parallel run, you can create dependencies between test runs and control the start time of the runs. This is supported only when you use a .json file in the ParallelRunner command.

Using conditions, a test run can do one or more of the following:

- Wait a number of seconds before starting.

- Start after a different test run ends.

- Start only after a different test run reaches a specific status.

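For each test run defined in the .json file, create conditions that control when the run starts. Build the conditions in the test run's section, using the condition elements shown in the following sample: condition, operator, conditions, waitSeconds, waitForTestRun, and waitForRunStatuses. Other runs are referenced by their testRunName.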

You can create the following types of conditions:

- Simple conditions, which contain one or more wait statements.

- Combined conditions, which contain multiple conditions and an AND or OR operator that specifies whether all of the conditions, or any one of them, must be met.

Sample .json configuration file with synchronization

The following is a sample .json configuration file that provides a synchronized parallel run:

```json
{
  "reportPath": "C:\\parallelexecution",
  "reportName": "My Report Title",
  "waitForEnvironment": true,
  "parallelRuns": [
    {
      "test": "C:\\Users\\qtp\\Documents\\Functional Testing\\GUITest_Mobile",
      "testRunName": "1",
      "condition": {
        "operator": "AND",
        "conditions": [
          {
            "waitSeconds": 5
          },
          {
            "operator": "OR",
            "conditions": [
              {
                "waitSeconds": 10,
                "waitForTestRun": "2"
              },
              {
                "waitForTestRun": "3",
                "waitSeconds": 10,
                "waitForRunStatuses": ["failed", "warning", "canceled"]
              }
            ]
          }
        ]
      },
      "env": {
        "mobile": {
          "device": {
            "osType": "IOS"
          }
        }
      }
    },
    {
      "test": "C:\\Users\\qtp\\Documents\\Functional Testing\\GUITest_Mobile",
      "testRunName": "2",
      "condition": {
        "waitForTestRun": "3",
        "waitSeconds": 10
      },
      "env": {
        "mobile": {
          "device": {
            "osType": "IOS"
          }
        }
      }
    },
    {
      "test": "C:\\Users\\qtp\\Documents\\Functional Testing\\GUITest_Mobile",
      "testRunName": "3",
      "env": {
        "mobile": {
          "device": {
            "deviceID": "63010fd84e136ce154ce466d79a2ebd357aa5ce2"
          }
        }
      }
    }
  ]
}
```

When running ParallelRunner with this .json file, the following happens:

- Test run 3 waits for device 63010fd84e136ce154ce466d79a2ebd357aa5ce2.

- Test run 2 waits 10 seconds after test run 3 ends, and then waits for an available iOS device.

- Test run 1 first waits 5 seconds after the ParallelRunner command is run. Then it waits 10 seconds more after run 2 ends or run 3 reaches a status of failed, warning, or canceled. Then it waits until an iOS device is available.
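The timing in this walkthrough can be reasoned about with a simplified model. The following Python sketch is an assumption, not ParallelRunner's scheduler: earliest_start is hypothetical, it treats AND as waiting for the latest child condition and OR as waiting for the earliest, treats a run that never reaches a listed status as never satisfying that condition, and ignores device availability entirely.

```python
import math


def earliest_start(condition, finished):
    """Earliest start time, in seconds after launch, allowed by a condition.

    finished maps a testRunName to (end_time_seconds, final_status).
    Simplified model: AND waits for all children, OR for the first one.
    """
    if not condition:
        return 0.0  # no condition: the run can start immediately
    if "operator" in condition:
        times = [earliest_start(child, finished) for child in condition["conditions"]]
        operator = condition["operator"].upper()
        if operator == "AND":
            return max(times)
        if operator == "OR":
            return min(times)
        raise ValueError(f"unknown operator: {condition['operator']}")
    start = 0.0
    run_name = condition.get("waitForTestRun")
    if run_name is not None:
        end_time, status = finished.get(run_name, (math.inf, None))
        statuses = condition.get("waitForRunStatuses")
        if statuses is not None and status not in statuses:
            return math.inf  # the awaited status never occurs
        start = end_time
    return start + condition.get("waitSeconds", 0)


if __name__ == "__main__":
    run1_condition = {
        "operator": "AND",
        "conditions": [
            {"waitSeconds": 5},
            {"operator": "OR", "conditions": [
                {"waitSeconds": 10, "waitForTestRun": "2"},
                {"waitForTestRun": "3", "waitSeconds": 10,
                 "waitForRunStatuses": ["failed", "warning", "canceled"]},
            ]},
        ],
    }
    # Hypothetical timings: run 3 fails at 60 s, run 2 ends at 120 s.
    finished = {"3": (60.0, "failed"), "2": (120.0, "warning")}
    print(earliest_start(run1_condition, finished))  # 70.0
```

With these made-up timings, run 1 may start no earlier than 70 seconds: the 5-second wait is already satisfied, and the OR branch for run 3 is met 10 seconds after run 3 fails, before the branch for run 2.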

Stop the parallel execution

To stop the test runs from the command line, press CTRL+C.

- Any test with a status of Running or Pending, indicating that it has not yet finished, stops immediately.

- No run results are generated for these canceled tests.

Sample ParallelRunner response and run results

The following code shows a sample response displayed in the command line after running tests using ParallelRunner.

>ParallelRunner -t ".\GUITest_Demo" -d "manufacturer:samsung,osType:Android,osVersion:Any;deviceID:N2F4C15923006550" -r "C:\parallelexecution" -rn "My Report Name"

Report Folder: C:\parallelexecution\Res1

ProcessId, Test, Report, Status

3328, GUITest_Demo, GUITest_Demo_[1], Running -

3348, GUITest_Demo, GUITest_Demo_[2], Pass

In this example, when the run is complete, the following result files are saved in the C:\parallelexecution\Res1 directory:

- GUITest_Demo_[1]

- GUITest_Demo_[2]

The parallelrun_results.html file shows results for all tests run in parallel. The report name in the browser tab is My Report Name.

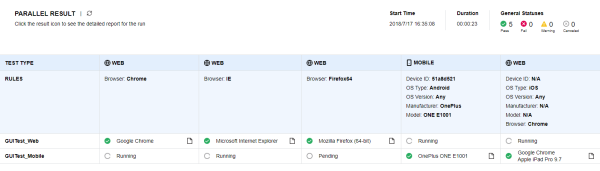

The first row in the table indicates any rules defined by the ParallelRunner commands, and subsequent rows indicate the result and the actual environment used during the test.

Tip: For each test run, click the HTML report  icon to open the specific run results. This is supported only when OpenText Functional Testing is configured to generate HTML run results.



Note: The report provides a separate column for each browser name, using a case-sensitive comparison for browser names. Therefore, separate columns would be provided for IE and ie.

See also:
