Improve AI-based test object identification

Supported for web and mobile testing

This topic discusses some of the elements that OpenText Functional Testing for Developers' Artificial Intelligence (AI) features use to uniquely identify objects in your application.

In this topic:

- Associating text with objects

- Text recognition customization

- Identifying objects by relative location

- Describe a control using an image

- Automatic scrolling

Associating text with objects

The text associated with an object can help identify an object uniquely. For example:

browser.describe(AiObject.class, new AiObjectDescription(com.hp.lft.sdk.ai.AiTypes.BUTTON, "ADD TO CART")).click();

Using the ADD TO CART text to describe the object in this step ensures that the correct button is clicked.

When objects are detected in an application, if a field has multiple labels around it, the label that seems most logical is used to identify the object.

However, if you decide to use a different label in your object description, the object is still identified.

Example: When detecting objects in an application, a button is associated with the text on the button, but a field is associated with its label, as opposed to its content.

If a field has multiple labels, one is chosen by default for identification. However, this field is still identified correctly if you use a different label to describe it in a test step.

In some cases, multiple text strings that are close to each other are combined into one text string and used to identify one object.

You can edit the combined string and leave just one of the original strings to use for object identification. Make sure to remove a whole string and not part of it.

Example:

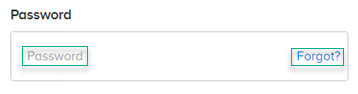

For the text box in this example, AI Object Inspection combines Password and Forgot? into one string and uses "Password Forgot?" to describe the object in the code it generates.

You can remove one whole string and change the code to use just "Password" for the description without causing the test to fail.
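The "remove a whole string, not part of it" rule can be sketched as a small check. This is a plain-Java illustration only, not SDK code: the `LabelEditCheck` class and its method are hypothetical names, and the sketch assumes the combined string is the detected strings joined by single spaces.

```java
import java.util.List;

/** Illustrative helper (hypothetical, not part of the SDK). */
final class LabelEditCheck {
    private LabelEditCheck() {}

    /**
     * Returns true if the edited label consists only of whole detected
     * strings, kept in their original order and joined by single spaces.
     */
    static boolean keepsWholeStrings(List<String> detected, String editedLabel) {
        String target = editedLabel.trim();
        return !target.isEmpty() && matches(detected, 0, target);
    }

    private static boolean matches(List<String> detected, int index, String remaining) {
        if (index == detected.size()) {
            return false; // text is left over that no detected string accounts for
        }
        String current = detected.get(index);
        // Option 1: keep this detected string as a whole.
        if (remaining.equals(current)) {
            return true;
        }
        if (remaining.startsWith(current + " ")
                && matches(detected, index + 1, remaining.substring(current.length() + 1))) {
            return true;
        }
        // Option 2: drop this detected string entirely.
        return matches(detected, index + 1, remaining);
    }
}
```

With the detected strings "Password" and "Forgot?", the labels "Password", "Forgot?" and "Password Forgot?" pass this check, while a partial string such as "Pass" does not.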

Text recognition customization

By default, AI-based steps use different text recognition settings than property-based steps such as GetVisibleText and GetTextLocations. This includes the OCR engine used and the languages in which text is recognized.

Text recognition in multiple languages

By default, AI-based steps recognize text in English only. To recognize text in other languages, select the relevant OCR languages in the AI tab of the OpenText Functional Testing for Developers runtime engine settings. For details, see AI Object Detection Settings.

You can also customize the languages temporarily within a test run by adding AI run settings steps to your test. For details, see the AIRunSettings Class in the Java, .NET, and JavaScript SDK references.

For example:

// Configure OCR settings to enable detection for English, German, French, Hebrew and Traditional Chinese
AiRunSettings.updateOCRSettings((new AiOCRSettings()).setLanguages(new String[]{"en", "de", "fr", "he", "zht"}));

Or, you can create a set with your language list and use it to assign the language setting:

// Configure OCR settings to enable detection for English and Russian
Set<String> languages = new HashSet<>();
languages.add(AiOCRLanguages.RUSSIAN);
languages.add(AiOCRLanguages.ENGLISH);
AiRunSettings.updateOCRSettings(new AiOCRSettings().setLanguages(languages));

Switch OCR settings for AI-based steps

In an AI-based Web test, you can include AI run settings steps that switch the OCR settings used in the current test run:

- AI. AI-based steps use the AI OCR engine and the OCR languages you configured in the AI settings. This is the default setting.

- FT. AI-based steps use the same OCR engine and languages used for text recognition in property-based steps. Currently, the OCR engine used is ABBYY.

For example:

// Configure OCR settings to switch from the AI OCR engine and languages to the ones used in property-based steps
AiRunSettings.updateOCRSettings((new AiOCRSettings()).setOCRType(OCRType.FT));

For details, see the Java, JavaScript, and .NET SDK References.

Identifying objects by relative location

To run a step on an object, the object must be identified uniquely. When multiple objects match your object description, you can add the object location to provide a unique identification. The location can be ordinal (relative to similar objects in the application) or proximal (relative to a different AI object, which serves as an anchor).

A proximal location may help create more stable tests that can withstand predictable changes to the application's user interface. Use anchor objects that are expected to maintain the same relative location to your object over time, even if the user interface design changes.

In your object's description, include the Locator details, such as position or relation. You cannot describe an object by using both position and relation.

To describe an object's ordinal location

Provide the following information:

- The object's occurrence. For example, 0, 1, and 2, for first, second, and third.

- The orientation, the direction in which to count occurrences: FromLeft, FromRight, FromTop, FromBottom.
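As a rough illustration of how an occurrence and an orientation combine to select a single object, the sketch below sorts hypothetical bounding boxes and picks the requested zero-based index. It is a plain-Java model, not SDK code: the `Box` class, the `pick` method, and the choice to order objects by their leading edge are assumptions made for this example, and the product's own ordering logic may differ.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Illustrative model of ordinal location (hypothetical, not part of the SDK). */
final class OrdinalLocationSketch {
    enum Direction { FROM_LEFT, FROM_RIGHT, FROM_TOP, FROM_BOTTOM }

    /** Hypothetical bounding box of a detected object, in screen pixels. */
    static final class Box {
        final String name;
        final int x, y, width, height;

        Box(String name, int x, int y, int width, int height) {
            this.name = name;
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }
    }

    /** Returns the matching object at the given zero-based occurrence, counted in the given direction. */
    static Box pick(List<Box> matches, Direction direction, int occurrence) {
        Comparator<Box> order;
        switch (direction) {
            case FROM_LEFT:
                order = Comparator.comparingInt((Box b) -> b.x);
                break;
            case FROM_RIGHT:
                order = Comparator.comparingInt((Box b) -> b.x + b.width).reversed();
                break;
            case FROM_TOP:
                order = Comparator.comparingInt((Box b) -> b.y);
                break;
            default: // FROM_BOTTOM
                order = Comparator.comparingInt((Box b) -> b.y + b.height).reversed();
                break;
        }
        List<Box> sorted = new ArrayList<>(matches); // leave the caller's list untouched
        sorted.sort(order);
        if (occurrence < 0 || occurrence >= sorted.size()) {
            throw new IndexOutOfBoundsException("No occurrence " + occurrence + " among " + sorted.size() + " matches");
        }
        return sorted.get(occurrence);
    }
}
```

In this model, occurrence 0 with `FROM_RIGHT` returns the match whose right edge is furthest to the right.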

For example, the following code snippet clicks the first Twitter image from the right in your application.

browser.describe(AiObject.class, new AiObjectDescription.Builder()
    .aiClass(com.hp.lft.sdk.ai.AiTypes.TWITTER)
    .locator(new com.hp.lft.sdk.ai.Position(com.hp.lft.sdk.ai.Direction.FROM_RIGHT, 0)).build()).click();

To describe an object's location in proximity to a different AI object

Provide the following information:

- The description of the anchor object.

  The anchor must be an AI object that belongs to the same context as the object you are describing.

  The anchor can also be described by its location.

- The direction of the anchor object compared to the object you are describing: WithAnchorOnLeft, WithAnchorOnRight, WithAnchorAbove, WithAnchorBelow.

The object returned is the AI object that matches the description and is closest and most aligned with the anchor, in the specified direction.
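To make "closest and most aligned" more concrete, here is a minimal plain-Java model of anchor-based selection. It is not SDK code and does not claim to reproduce the product's algorithm: the `Box` class, the `closest` method, and the score (gap to the anchor plus the offset between centers) are assumptions chosen only to illustrate the idea.

```java
import java.util.List;
import java.util.Optional;

/** Illustrative model of proximal location (hypothetical, not part of the SDK). */
final class ProximalLocationSketch {
    enum RelationType { WITH_ANCHOR_ON_LEFT, WITH_ANCHOR_ON_RIGHT, WITH_ANCHOR_ABOVE, WITH_ANCHOR_BELOW }

    /** Hypothetical bounding box of a detected object, in screen pixels. */
    static final class Box {
        final String name;
        final int x, y, width, height;

        Box(String name, int x, int y, int width, int height) {
            this.name = name;
            this.x = x;
            this.y = y;
            this.width = width;
            this.height = height;
        }

        double centerX() { return x + width / 2.0; }
        double centerY() { return y + height / 2.0; }
    }

    /** Picks the candidate on the required side of the anchor with the lowest gap + misalignment score. */
    static Optional<Box> closest(Box anchor, List<Box> candidates, RelationType relation) {
        Box best = null;
        double bestScore = Double.MAX_VALUE;
        for (Box c : candidates) {
            double gap;
            double misalignment;
            switch (relation) {
                case WITH_ANCHOR_ON_LEFT: // anchor is left of the target, so the target is to its right
                    gap = c.x - (anchor.x + anchor.width);
                    misalignment = Math.abs(c.centerY() - anchor.centerY());
                    break;
                case WITH_ANCHOR_ON_RIGHT:
                    gap = anchor.x - (c.x + c.width);
                    misalignment = Math.abs(c.centerY() - anchor.centerY());
                    break;
                case WITH_ANCHOR_ABOVE: // anchor is above the target, so the target is below it
                    gap = c.y - (anchor.y + anchor.height);
                    misalignment = Math.abs(c.centerX() - anchor.centerX());
                    break;
                default: // WITH_ANCHOR_BELOW
                    gap = anchor.y - (c.y + c.height);
                    misalignment = Math.abs(c.centerX() - anchor.centerX());
                    break;
            }
            if (gap < 0) {
                continue; // candidate is not on the required side of the anchor
            }
            double score = gap + misalignment; // assumed scoring, for illustration only
            if (score < bestScore) {
                bestScore = score;
                best = c;
            }
        }
        return Optional.ofNullable(best);
    }
}
```

In this model, two download buttons at the same horizontal distance from a "Linux" anchor are told apart by alignment: the one in the same row as the anchor scores lower and is returned.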

Note: When you identify an object by a relative location, the application size must remain unchanged in order to successfully run the test script.

For example, this test clicks on the download button to the right of the Linux text, below the Latest Edition text block:

AiObject latestEdition = browser.describe(AiObject.class, new AiObjectDescription(com.hp.lft.sdk.ai.AiTypes.TEXT_BLOCK, "Latest Edition"));

AiObject linuxUnderLatestEdition = browser.describe(AiObject.class, new AiObjectDescription.Builder()
    .aiClass(com.hp.lft.sdk.ai.AiTypes.TEXT)
    .text("Linux")
    .locator(new com.hp.lft.sdk.ai.Relation(com.hp.lft.sdk.ai.RelationType.WITH_ANCHOR_ABOVE, latestEdition))
    .build());

AiObject downloadButton = browser.describe(AiObject.class, new AiObjectDescription.Builder()
    .aiClass(com.hp.lft.sdk.ai.AiTypes.BUTTON)
    .text("download")
    .locator(new com.hp.lft.sdk.ai.Relation(com.hp.lft.sdk.ai.RelationType.WITH_ANCHOR_ON_LEFT, linuxUnderLatestEdition))
    .build());

downloadButton.click();

For details, see the Locator Class in the Java, .NET, and JavaScript SDK references.

Describe a control using an image

If your application includes a control type that is not supported by AI object identification, you can provide an image of the control that can be used to identify the control. Specify the class name that you want to use for this control type by registering it as a custom class.

After you register a custom class, you can use it in AIUtil steps as a control type. For example:

public void test() throws GeneralLeanFtException, IOException {
    Browser browser = BrowserFactory.launch(BrowserType.CHROME);
    browser.navigate("https://www.advantageonlineshopping.com/#/");

    // Register the image as a custom class named "MyClass"
    AiUtil.registerCustomClass("MyClass", Path.of("C:\\Users\\MyUser\\Pictures\\MyImage.PNG"));

    // Describe the control by its custom class and click it
    AiObject aiObject = browser.describe(AiObject.class, new AiObjectDescription("MyClass"));
    aiObject.click();
}

You can even use a registered custom class to describe an anchor object, which is then used to identify other objects in its proximity.

Note:

- You cannot use a registered class as an anchor to identify another registered class by proximity.

- The image you use to describe the control must match the control exactly.

- When running tests on a remote machine, the image used to describe the control must be located on the machine running the test.
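Because the image must exist on the machine that runs the test, it can help to fail early with a clear message when the file is missing. The following sketch uses only `java.nio.file`; the `CustomClassImageCheck` class and its method are hypothetical helpers written for this example, and the resulting path would then be passed to the registration call used in your test.

```java
import java.io.FileNotFoundException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Illustrative pre-check for a control image (hypothetical, not part of the SDK). */
final class CustomClassImageCheck {
    private CustomClassImageCheck() {}

    /**
     * Resolves the image path on the local machine and verifies that it
     * points to a readable regular file before it is used for registration.
     */
    static Path requireReadableImage(String imagePath) throws FileNotFoundException {
        Path path = Paths.get(imagePath).toAbsolutePath();
        if (!Files.isRegularFile(path) || !Files.isReadable(path)) {
            throw new FileNotFoundException("Control image not found on this machine: " + path);
        }
        return path;
    }
}
```

A test could call `requireReadableImage` first and pass the returned `Path` on when registering the custom class, so that a missing image is reported before any AI-based step runs.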

For more details, see the AIUtil.RegisterCustomClass method in the Java, .NET, and JavaScript SDK References.

Automatic scrolling

When running a test, if the object is not displayed in the application but the web page or mobile app is scrollable, OpenText Functional Testing for Developers automatically scrolls further in search of the object. After an object matching the description is identified, no further scrolling is performed. Identical objects displayed in subsequent application pages or screens will not be found.

By default, OpenText Functional Testing for Developers scrolls down twice. You can customize the direction of the scroll and the maximum number of scrolls to perform, or deactivate scrolling if necessary.

- Globally customize the scrolling in the AI tab of the OpenText Functional Testing for Developers runtime engine settings. For details, see AI Object Detection Settings.

- Customize the scrolling temporarily within a test run by adding AI run settings steps to your test. For details, see the AIRunSettings Class in the Java, .NET, and JavaScript SDK references.

For example:

// Configure autoscroll settings to enable scrolling up
AiRunSettings.updateAutoScrollSettings((new AiAutoScrollSettings()).enable(ScrollDirection.UP, 10));
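The search behavior described in this section (check the current view, scroll up to a maximum number of times, stop at the first match) can be modeled in a few lines. This is a simplified plain-Java sketch, not SDK code: the `AutoScrollSketch` class is hypothetical, and each screen is represented as a list of the object names visible after a given number of scrolls.

```java
import java.util.List;
import java.util.OptionalInt;

/** Illustrative model of automatic scrolling (hypothetical, not part of the SDK). */
final class AutoScrollSketch {
    private AutoScrollSketch() {}

    /**
     * @param screens    screens.get(i) lists the objects visible after i scrolls
     * @param target     the object to look for
     * @param maxScrolls maximum number of scrolls to perform (0 means no scrolling)
     * @return the number of scrolls performed when the object was found, or empty if it was not found
     */
    static OptionalInt findWithScrolling(List<List<String>> screens, String target, int maxScrolls) {
        for (int scrolls = 0; scrolls <= maxScrolls && scrolls < screens.size(); scrolls++) {
            if (screens.get(scrolls).contains(target)) {
                return OptionalInt.of(scrolls); // stop at the first match, no further scrolling
            }
        }
        return OptionalInt.empty();
    }
}
```

In this model, with a limit of two scrolls, an object that first appears after three scrolls is not found, and an object that appears on several screens is always matched on the first screen that shows it.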

See also:

- AI-based testing

- The Java, .NET, and JavaScript SDK references