Failure results

The following flow demonstrates how to get a list of application modules whose automated tests failed in the last 24 hours.

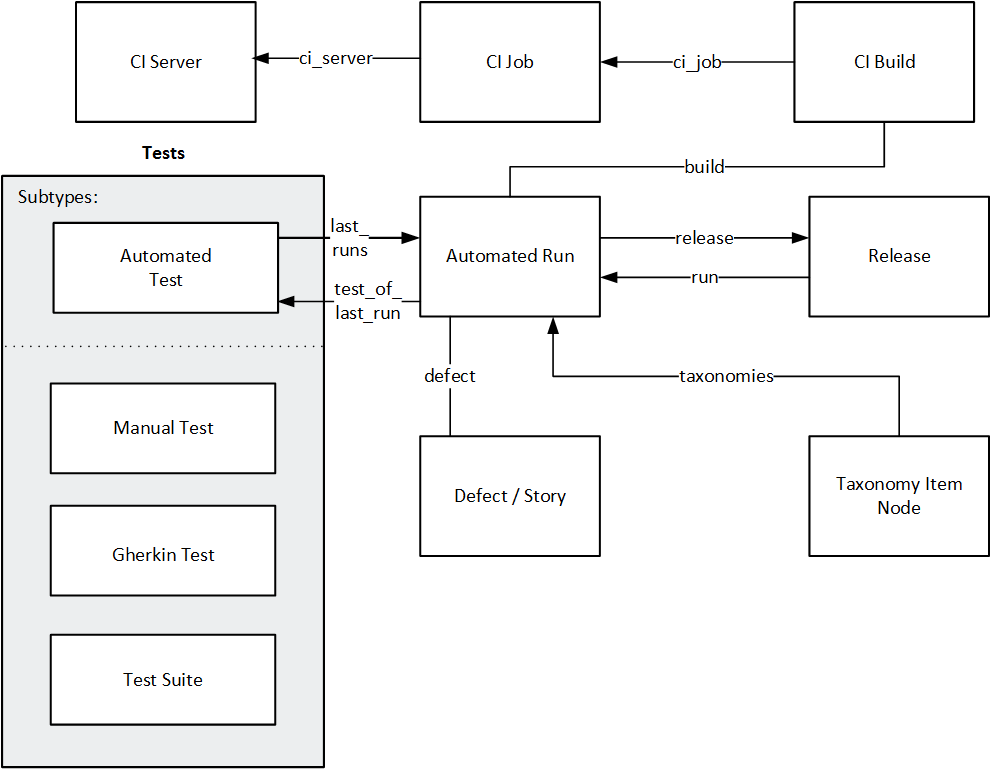

Entity relationship diagram

This flow accesses the following entities. The table describes how they relate to each other.

| Entity | Relationships in this flow | Description of relationship | Reference / relationship fields |
|---|---|---|---|
| CI Server | CI Job | Multiple jobs can be defined for each CI server. | ci_server |
| CI Build | CI Job | Jobs are associated with builds. Each build can contain one or more jobs. | ci_job |
| Automated Run | CI Build | Builds contain automated tests (jobs). | build |
| Automated Run | Defect / Story | Runs can be associated with defects and stories. | defect |
| Automated Run | Release | Runs can be associated with releases. | run |
| Automated Run | Taxonomy Item Node | Runs can be associated with specific environments (taxonomies), such as operating systems or browsers. | taxonomies |
| Test | None | The test entity represents types of tests, including manual, Gherkin, and automated. | subtype |
| Automated Test | Test | The automated test is a subtype of the test entity. | type |
| Automated Test | Automated Run | Each automated test can have 0 or more runs associated with it. | last_runs |
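To make the reference fields concrete, here is a minimal Python sketch of how an automated run can be traced back to its build, job, and CI server by following the `build`, `ci_job`, and `ci_server` fields listed in the table. Only those three field names come from the table; the classes, the `name` attribute, and the `ci_path` helper are hypothetical in-memory stand-ins, not the server's REST API or entity schema.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for the entities in the table. Only the reference
# field names (ci_server, ci_job, build) are taken from the table.

@dataclass
class CIServer:
    name: str

@dataclass
class CIJob:
    name: str
    ci_server: CIServer  # each job references the CI server it is defined on

@dataclass
class CIBuild:
    name: str
    ci_job: CIJob  # each build references its CI job

@dataclass
class AutomatedRun:
    name: str
    status: str
    build: CIBuild  # each run references the build that produced it

def ci_path(run: AutomatedRun) -> tuple[str, str, str]:
    """Follow run.build -> build.ci_job -> job.ci_server and return their names."""
    build = run.build
    job = build.ci_job
    server = job.ci_server
    return (server.name, job.name, build.name)

# Values mirror the build element in the sample payload of this flow.
server = CIServer("uuid")
job = CIJob("junit-job", server)
build = CIBuild("1", job)
run = AutomatedRun("testTwo", "Failed", build)
print(ci_path(run))  # ('uuid', 'junit-job', '1')
```

Chaining the references in this direction (run to build to job to server) is what lets a failed run be attributed to a specific CI server and job.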

Flow

After authenticating, do the following:

Report test results, including references to the relevant CI server, job, and build:

POST .../api/shared_spaces/<space_id>/workspaces/<workspace_id>/test-results

Payload:

```xml
<?xml version='1.0' encoding='UTF-8'?>
<test_result>
    <build server_id="uuid"
           job_id="junit-job"
           job_name="junit-job"
           build_id="1"
           build_name="1"/>
    <release name="MyRelease"/>
    <backlog_items>
        <backlog_item_ref id="1011"/>
    </backlog_items>
    <product_areas>
        <product_area_ref id="1003"/>
    </product_areas>
    <test_fields>
        <test_field_ref id="1005"/>
    </test_fields>
    <environment>
        <taxonomy_ref id="1004"/>
    </environment>
    <test_runs>
        <test_run module="/helloWorld" package="hello"
                  class="HelloWorldTest" name="testOne" duration="3"
                  status="Passed" started="1430919295889">
            <release_ref id="1004"/>
        </test_run>
        <test_run module="/helloWorld" package="hello"
                  class="HelloWorldTest" name="testTwo" duration="2"
                  status="Failed" started="1430919316223">
            <product_areas>
                <product_area_ref id="1007"/>
                <product_area_ref id="1008"/>
            </product_areas>
            <error type="java.lang.NullPointerException" message="nullPointer Exception">at com.mqm.testbox.parser.TestResultUnmarshallerITCase.assertTestRun(TestResultUnmarshallerITCase.java:298) at com.mqm.testbox.parser.TestResultUnmarshallerITCase.assertTestResultEquals(TestResultUnmarshallerITCase.java:281) at com.mqm.testbox.parser.TestResultUnmarshallerITCase.testUnmarshall(TestResultUnmarshallerITCase.java:65)</error>
        </test_run>
        <test_run module="/helloWorld" package="hello"
                  class="HelloWorldTest" name="testThree" duration="4"
                  status="Skipped" started="1430919319624">
            <test_fields>
                <test_field_ref id="1006"/>
                <test_field type="OpenText.qc.test-new-type" value="qc.test-new-type.acceptance"/>
            </test_fields>
        </test_run>
        <test_run module="/helloWorld2" package="hello"
                  class="HelloWorld2Test" name="testOnce" duration="2"
                  status="Passed" started="1430919322988">
            <environment>
                <taxonomy_ref id="1008"/>
                <taxonomy type="OS" value="Linux"/>
            </environment>
        </test_run>
        <test_run module="/helloWorld2" package="hello"
                  class="HelloWorld2Test" name="testDoce" duration="3"
                  status="Passed" started="1430919326351">
            <backlog_items>
                <backlog_item_ref id="1012"/>
            </backlog_items>
        </test_run>
    </test_runs>
</test_result>
```
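The reporting step can be sketched with Python's standard `urllib.request`. This is a minimal sketch under stated assumptions: `base_url` stands in for the server prefix elided as `...` in the endpoint, `cookie` stands in for whatever session credential the authentication step returned, and the `application/xml` content type is an assumption to confirm against the Add automated test results reference. The function only prepares the request; it does not send it.

```python
import urllib.request

def build_test_results_request(base_url: str, space_id: str, workspace_id: str,
                               payload_xml: str, cookie: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST of a test-result XML payload.

    base_url and cookie are hypothetical placeholders; see the lead-in.
    """
    url = (f"{base_url.rstrip('/')}/api/shared_spaces/{space_id}"
           f"/workspaces/{workspace_id}/test-results")
    return urllib.request.Request(
        url,
        data=payload_xml.encode("utf-8"),  # the XML payload shown above
        method="POST",
        headers={
            "Content-Type": "application/xml",  # assumed content type
            "Cookie": cookie,  # assumed: session credential from authentication
        },
    )

req = build_test_results_request("https://server.example.com", "1001", "1002",
                                 "<test_result/>", "session=placeholder")
print(req.get_method(), req.full_url)
# POST https://server.example.com/api/shared_spaces/1001/workspaces/1002/test-results
# To actually send it: urllib.request.urlopen(req)
```

Separating request construction from sending keeps the URL and headers easy to inspect before anything reaches the server.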

For details, see Add automated test results and Test results.
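This section does not show the query that returns the failing modules. As an offline illustration of the selection criterion only, the following minimal Python sketch reads a payload like the one above with `xml.etree.ElementTree` and collects the `module` of every `test_run` whose `status` is `Failed` and whose `started` time (epoch milliseconds, judging by the sample values) falls within the 24 hours before a given `now_ms`. The `failed_modules` helper is hypothetical and filters on the client side; it is not the server's query API.

```python
import xml.etree.ElementTree as ET

DAY_MS = 24 * 60 * 60 * 1000  # 24 hours in milliseconds

def failed_modules(payload_xml: str, now_ms: int, window_ms: int = DAY_MS) -> set[str]:
    """Return the module of every Failed test_run started within
    window_ms before now_ms (inclusive on both ends)."""
    root = ET.fromstring(payload_xml)
    modules = set()
    for run in root.iter("test_run"):
        started = int(run.get("started", "0"))
        if run.get("status") == "Failed" and now_ms - window_ms <= started <= now_ms:
            modules.add(run.get("module"))
    return modules

# Trimmed version of the sample payload; only the attributes used are kept.
sample = """<test_result><test_runs>
  <test_run module="/helloWorld" name="testOne" status="Passed" started="1430919295889"/>
  <test_run module="/helloWorld" name="testTwo" status="Failed" started="1430919316223"/>
  <test_run module="/helloWorld2" name="testOnce" status="Passed" started="1430919322988"/>
</test_runs></test_result>"""
print(failed_modules(sample, now_ms=1430919400000))  # {'/helloWorld'}
```

Passing `now_ms` explicitly, instead of reading the clock, keeps the result reproducible for the fixed timestamps in the sample.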

See also:
