Create manual tests

This topic describes how to create manual tests and parameterize their steps with data sets.

Create tests

This section describes how to create a manual test. You can create tests from the Quality, Team Backlog, and Requirements modules, either from the Tests page or from a Test Suite.

To create a manual test:

- Create a test in one of the following ways:

  - In the Team Backlog or Quality module menus, open the Tests tab. Click + Manual Test. If this option is not visible, expand the list and select + Manual Test.

  - In the Requirements module menu, open the Tests tab and select a Requirements document. Click + Manual Test. If this option is not visible, expand the list and select + Manual Test.

  - Inside a Test Suite, open the Planning tab. Click + Manual Test. If this option is not visible, expand the list and select + Manual Test.

- In the Add Manual Test dialog box, assign test attributes:

  - Name: The name of the test.

  - Test type: The type of test, such as acceptance, end-to-end, regression, sanity, security, and performance.

  - Estimated duration (minutes): The time it takes to run the test.

  - Backlog coverage: The backlog items that the test covers. This helps you track the release quality.

  - Application modules: The product's application modules. This helps you track product quality, regardless of release.

  - Description: A textual description of the test.

- Click Add & Edit. The test is created, and the Steps tab of the test opens.

- Add steps to the test. For details, see Add steps to a test.

- Save the test.

Tip: While you work, save and label versions of your manual test. As you work with the test over time, compare versions to view modifications. For details, see Test script versioning.

Suggest and generate manual tests

The AI-powered Aviator can suggest manual tests for a feature and create new manual tests based on the suggestions. Aviator uses context from the feature, such as the feature description, backlog items, and existing tests, to suggest relevant tests.

Prerequisites:

To generate test suggestions, you must have the following:

- An Aviator license. For details, see Licenses.

- A role with the AI: Suggest tests permission. For details, see Roles and permissions.

To generate suggested tests for a feature using Aviator:

- Open the feature's Tests tab.

- Click the Generate tests with Aviator button.

- Review the suggested tests, and click to select the tests you want to create.

If you do not want to use any of the suggested tests, you can generate different suggestions by clicking Dismiss all and generate new ideas.

- Click Add selected tests. New manual tests are created for the feature based on the suggestions you selected.

- For each new manual test, open the test and add the required steps. For details, see Add steps to a test.

Tip: For items generated by Aviator, the AI suggested field is set to Yes. You can use this field to track which items were created by Aviator.

Generate tests based on video

The AI-powered Aviator Smart Assistant can generate manual tests for a feature based on a video recording, with test steps based on the actions performed in the video. For details, see Aviator Smart Assistant.

Add steps to a test

After you create a test, add steps that describe how to set up the test scenario and validate the application.

To add steps to a test:

- Select the test's Steps tab.

- On the toolbar, select a view. The underlying test steps and syntax remain the same in all views. You can switch between views at any time.

  - Grid view: View the steps as a grid. Each row of the grid includes an Action column with a setup step, and an Expected result column with a corresponding validation step.

  - List view: View the steps as a list. Each line of the list includes one step: a setup step, validation step, or call step.

  - Text view: View the steps in plain text.

An admin can set the default script view for new users using the MANUAL_TEST_SCRIPT_DEFAULT_VIEW parameter. For details, see Configuration parameters.

- Add any of the following step types:

Make sure to use the correct syntax. For details, see Manual test syntax.

  - Step: An action to take in the application to set up the test scenario. Enter the step text. Example: Create a new Epic.

  - Validation step: Something to check in the application. Enter the validation step text. Example: Verify that the current phase is New. During the test run, specify a pass or fail status for each validation step.

  - Call step: Runs another test from within the current test. In the Add Tests dialog box, select the test or tests that you want to call, and then click Add. The step is added as a hyperlink to the original test. Click the View test steps button to display the steps of the test that you called. Note: At run time, the test uses the latest version of the called test, not the version that was latest at the time of planning.

Note: The Number of steps field in the test's Details tab is a sum of steps of all types, including validation and call steps.

- To apply text formatting, see Format text in the test script.

- To add attachments, select a step and click the Attach button. You can also paste an image directly into the test.

When you view the steps in Text view, the attachment appears on the line under the associated step.

You can view the file in the test's Attachments tab, in the Manual Runner when starting a test run, or in the test run report.

Note: If you rename a test's attachment after it is included in a step, you must manually update the link to the attachment in the step.

Use parameters in tests

You can use parameters in tests to run a single test several times with different values.

To use parameters, you must create a data table, define the parameters, and create at least one data set with values. The number of rows in the data set determines how many iterations the test runs.

If you create multiple data sets, select the data set to use when you run the test.

Multiple data sets are useful when you want your test to reflect numerous scenarios. For example:

- To test login success and failure, create two data sets: one with valid credentials, and another with credentials that fail to log in.

- You can create a different data set for each environment you want to test, such as different browsers or different operating systems.

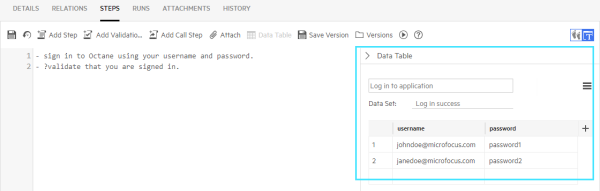

Here's an example showing a list of users and passwords. Each iteration uses a different set of values:

Data tables are shared, so you can use a single data table for multiple tests.
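The relationship between a data table, its data sets, their rows, and the resulting iterations can be pictured as a small Python model. Everything in it is hypothetical and for illustration only: the `DataTable` class, its methods, and the sample values are not part of the product or any API. The sketch encodes rules from this topic: a table needs at least one data set, the first data set is the default, and each row of the selected set produces one iteration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical model for illustration only; not the product's storage format or API.
Row = Dict[str, str]  # parameter name -> value used in one iteration


@dataclass
class DataTable:
    name: str
    parameters: List[str]
    # Data sets in creation order; the first one acts as the default.
    data_sets: Dict[str, List[Row]] = field(default_factory=dict)

    def add_set(self, set_name: str, rows: List[Row]) -> None:
        if set_name in self.data_sets:
            raise ValueError(f"data set {set_name!r} already exists")
        for row in rows:
            # Each row should hold a value for every parameter in the table.
            missing = set(self.parameters) - set(row)
            if missing:
                raise ValueError(f"row is missing values for {sorted(missing)}")
        self.data_sets[set_name] = rows

    def select(self, set_name: Optional[str] = None) -> List[Row]:
        """Rows of the requested data set, or of the default (first) set if none is given."""
        if not self.data_sets:
            raise ValueError("a data table needs at least one data set")
        if set_name is None:
            set_name = next(iter(self.data_sets))
        return self.data_sets[set_name]

    def iterations(self, set_name: Optional[str] = None) -> int:
        """One run iteration per row of the selected data set."""
        return len(self.select(set_name))


# Usage: one shared table with a set of valid and a set of invalid credentials.
login = DataTable("Login users", ["user", "password"])
login.add_set("Valid", [
    {"user": "alice", "password": "correct-1"},
    {"user": "bob", "password": "correct-2"},
])
login.add_set("Invalid", [{"user": "alice", "password": "wrong"}])
print(login.iterations())           # default set "Valid": 2 iterations
print(login.iterations("Invalid"))  # 1 iteration
```

Keeping data sets in an insertion-ordered dictionary is a design choice of this sketch that makes "first set is the default" fall out naturally.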

To work with parameters:

- Open the test's Steps tab, and click Data Table.

- In the Data table pane that opens, click Create a new table.

- Enter a name for the table and rename the parameters. You can add or remove columns as required.

To add or remove rows or columns, click the arrow next to the row or column name. The arrow is visible when you hover over a cell or column header.

Parameter names cannot contain spaces or angle brackets (< >).

- In each row, enter a set of parameter values.

- To add data sets, click the menu and then click Add Set. Enter parameter values for this set.

The first data set in the data table is always the default data set. When a data set is not explicitly selected for a run, the default data set is used.

When you import a data table from Excel, the names of the sheets in the import file become the names of the target data sets. You can rename data sets using the menu commands after import.

- Use the Menu options to manage your parameter tables. If you select Remove from test, the table is disconnected from the current test but is still saved. If you select Delete table, the table is removed from all tests that use it. Before the table is deleted, the tests that use it are displayed.

- In the relevant test step, enter parameter names using the following syntax: <parameter name>.

Tip: You can also access the list of parameters by pressing CTRL+SPACE.

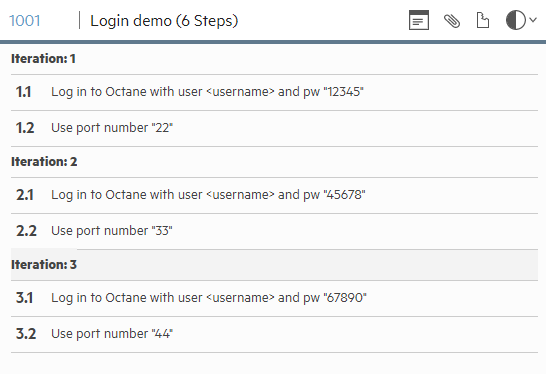

When you run the test, it runs in multiple iterations. Each iteration uses a different set of parameter values from the table.
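To illustrate how a parameterized step becomes concrete run steps, here is a minimal Python sketch of the substitution, assuming that each `<name>` placeholder is replaced with the value from the current row of the selected data set. The functions `render_step` and `expand_run` and the sample data are hypothetical; the sketch does not describe how the product actually implements runs.

```python
import re
from typing import Dict, List

# Assumed placeholder pattern: "<" + a name without spaces or angle brackets + ">".
PLACEHOLDER = re.compile(r"<([^<>\s]+)>")


def render_step(step_text: str, row: Dict[str, str]) -> str:
    """Substitute each <name> placeholder with this iteration's value.

    Placeholders with no matching parameter are left unchanged (a choice made
    for this sketch, not documented product behavior).
    """
    return PLACEHOLDER.sub(lambda m: row.get(m.group(1), m.group(0)), step_text)


def expand_run(steps: List[str], rows: List[Dict[str, str]]) -> List[List[str]]:
    """Render every step once per row: one list of steps per iteration."""
    return [[render_step(step, row) for step in steps] for row in rows]


steps = [
    "Log in as <user> with password <password>.",
    "Verify that the home page opens for <user>.",
]
rows = [
    {"user": "alice", "password": "correct-1"},
    {"user": "bob", "password": "correct-2"},
]
for number, iteration in enumerate(expand_run(steps, rows), start=1):
    print(f"Iteration {number}:", *iteration, sep="\n  ")
```

The pattern excludes spaces and angle brackets inside the name, mirroring the parameter naming rule in this topic, so text such as "value < 5 and > 3" in a validation step is not mistaken for a parameter.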

For example, here are run steps displayed to the tester:

Export and import data tables or data sets

You can export data tables and data sets to Excel. You can update the Excel file, and then import it back to your manual test.

Each data set name must meet the following requirements:

- Must be unique

- Must not exceed 31 characters

- Cannot include special characters (for example, \ / * [ ] : ?)
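If you prepare data set names outside the product, for example in an Excel file you plan to import, a quick check like the following Python sketch can catch names that break the rules above. The function `data_set_name_problems` is hypothetical. The forbidden characters are only the examples listed above, and the case-insensitive uniqueness check is an assumption based on how Excel compares sheet names.

```python
from typing import Iterable, List

MAX_NAME_LENGTH = 31
# Characters listed above as examples; the full forbidden set may be larger.
FORBIDDEN_CHARS = frozenset("\\/*[]:?")


def data_set_name_problems(name: str, existing_names: Iterable[str]) -> List[str]:
    """Return the rules that a proposed data set name breaks (empty list if none)."""
    problems = []
    # Case-insensitive comparison mirrors Excel sheet names; assumed, not documented here.
    if name.casefold() in {n.casefold() for n in existing_names}:
        problems.append("name is not unique")
    if len(name) > MAX_NAME_LENGTH:
        problems.append(f"name is longer than {MAX_NAME_LENGTH} characters")
    bad = sorted(FORBIDDEN_CHARS.intersection(name))
    if bad:
        problems.append("name contains special characters: " + " ".join(bad))
    return problems


print(data_set_name_problems("Chrome on Windows", ["Firefox on Linux"]))
print(data_set_name_problems("Chrome/Windows: QA?", ["chrome/windows: qa?"]))
```

Returning a list of problems rather than a single yes/no answer makes it easy to report every broken rule for a name at once.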

To export a data table:

- Open the test's Steps tab, and click Data Table.

- Select Export table. The data table opens in Excel. Each data set is displayed in a separate Excel sheet.

To export a data set:

- Open the test's Steps tab, and click Data Table.

- Select a Data Set.

- Select Export set. The data set opens in Excel.

To import a data table:

- Open the test's Steps tab, and click Data Table.

- Select Import table. The Import Data Table dialog box opens.

- Select Override the content of the current data table or Create new data table and link to current test.

- If you select Override the content of the current data table, click Browse and locate the data table. Make sure that the table name matches the table name in the Excel file from which you are importing. Click Import. Each sheet is read, and the content is either overridden or added as new data sets.

- If you select Create new data table and link to current test, click Import. A new data table is created with at least one data set and is linked to the current test.

Note: If you have unsaved changes and you create a new data table, your changes are lost.
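The override option of Import table can be pictured as merging sheets into data sets by name: sheet names become data set names, an existing set is overridden, and other sheets are added. The following Python sketch assumes the workbook has already been read into a mapping of sheet names to rows (reading the Excel file is out of scope), and `import_table_override` is a hypothetical name. Keeping data sets that have no matching sheet is also an assumption; this topic does not say what happens to them.

```python
from typing import Dict, List

Row = Dict[str, str]
DataSets = Dict[str, List[Row]]  # data set name -> rows, in display order


def import_table_override(current: DataSets, sheets: DataSets) -> DataSets:
    """Apply an imported workbook (sheet name -> rows) to the current data table.

    A sheet whose name matches an existing data set overrides that set's rows;
    any other sheet is added as a new data set named after the sheet. Data sets
    with no matching sheet are kept unchanged (an assumption of this sketch).
    """
    merged: DataSets = {name: list(rows) for name, rows in current.items()}
    for sheet_name, rows in sheets.items():
        # Reassigning an existing key keeps its position, so the first (default)
        # data set stays first; new sheets are appended at the end.
        merged[sheet_name] = list(rows)
    return merged


current = {
    "Valid": [{"user": "alice", "password": "correct-1"}],
    "Invalid": [{"user": "alice", "password": "wrong"}],
}
workbook = {
    "Invalid": [{"user": "bob", "password": "expired"}],
    "Locked": [{"user": "carol", "password": "correct-3"}],
}
print(import_table_override(current, workbook))
```

The function returns a new mapping instead of editing `current` in place, so the table's previous state remains available if the import needs to be discarded.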

To import a data set:

- Open the test's Steps tab, and click Data Table.

- Select a Data Set.

- Select Import Set. The Import Data Set dialog box opens.

- Select whether to Override the content of the selected data set or Create new data set under the current table.

- If you select Override the content of the selected data set, click Browse and locate the data table. Click Import. The first sheet is loaded, and its values are copied into the selected data set.

- If you select Create new data set under the current table, click Import. A new data set is created under the current data table.

Copy tests to another workspace

You can copy tests from one workspace to another, within the same shared space.

For details, see Copy items to another workspace.

Next steps:
