Run manual and Gherkin tests

This topic describes how to plan test runs and run them in ALM Octane using the Manual Runner.

Plan a test run

Planning a test run is optional. It enables you to organize your tests and run them without any further need to configure them. If you do not plan a test, ALM Octane prompts you for the same details when you begin the run.

Tip: A common practice is to add tests to a test suite and run the entire test suite. You can add the same test to a suite multiple times, using different environments and execution parameters. For details, see Plan and run test suites.

To plan test runs:

- Go to Tests in the Quality or Backlog module.

- Select the check box for one or multiple tests in the grid. You can include both manual and Gherkin tests.

- Click Plan Run.

- Provide values for the fields in the Plan Run dialog box. You must select a release. You can also choose the release version. For details, see Run with latest version.

- Click Plan. ALM Octane stores your configuration, allowing you to run the test in the future without having to configure it. For details, see Run tests.

How ALM Octane runs tests

Depending on the test type, the Manual Runner runs tests differently:

- When running a manual test, view the test steps and add details on the steps. For each validation step, assign a run status. After the test run, ALM Octane creates a compiled status.

- When running a Gherkin test, view the scenarios. For each scenario, assign a run status, and then assign a run status for the test as a whole.

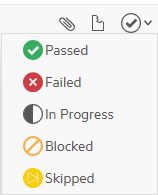

Note: The run statuses you assign when running manual tests (including Gherkin tests and test suites) are called native statuses. When analyzing test run results in the Dashboard or Overview tabs, ALM Octane categorizes the native statuses into the summary statuses Passed, Failed, Requires attention, Skipped, and Planned for clarity of analysis.

Run tests

You can run manual tests or Gherkin tests from ALM Octane.

Tip: You can also run manual tests using:

- Sprinter, the tool for designing and running manual tests. For details, see Run manual tests in Sprinter.

- A custom manual runner, such as one created with Python in GitLab. For details, see the Idea Exchange.

To run tests in ALM Octane:

- In the Backlog or Quality module, select the tests to run.

- In the toolbar, click Run. The Run tests dialog box opens, indicating how many tests you are about to run. If you select tests that have been planned, the Run tests dialog box indicates this. Specify the test run details:

Run name. A name for the manual run entity created when running a test.

By default, the run name is the test name. Provide a different name if necessary.

Release. The product release version with which the test run is associated.

The release is set by default according to the following hierarchy:

- The release in the context filter, provided a single release is selected, and the release is active. If this does not apply:

- The release in the sidebar filter, provided a single release is selected, and the release is active. If this does not apply:

- The last selected release, provided the release is active. If this does not apply:

- The current default release.

Milestone. (Optional) Selecting a milestone means that the run contributes to the quality status of the milestone. A unique last run is created and assigned to the milestone for coverage and tracking purposes, similar to a release.

Sprint. (Optional) Selecting a sprint means that the run is planned to be executed in the time frame of the sprint. Sprints do not have their own unique run results, so filtering Last Runs by Sprint returns only runs that were not overridden in a later sprint.

To see full run status and progress reports based on sprints, use the Test run history (manual) item type instead of using Last Runs in the Dashboard widgets.

Backlog Coverage. (Optional) The backlog items that the run covers. For details, see Test specific backlog items.

Program. (Optional) If you are working with programs, you can select a program to associate with the test run. For details, see Programs.

When you run a test associated with a program, you can select a release connected to the program, or a release that is not associated with any program.

The program is set by default according to the following hierarchy:

- Business rule population. If this does not apply:

- The program in the context filter, provided a single program is selected or only one program exists. If this does not apply:

- The program populated in the test.

Note: After you run tests, the Tests tab shows the tests that are connected to the program selected in the context filter, or with runs that ran on this program.

Environment tags. The environment tags to associate with the test run.

By default, ALM Octane uses the last selected environment tags.

Select other environment tags as necessary.

Script version. Select Use a version from another release to run a test using a script version from a different release. Any tests linked to a manual test from an Add Call Step use the same specified version.

Note:

- If there are multiple versions in the selected release, ALM Octane uses the latest version in the release.

- If there is no version in the selected release, ALM Octane uses the absolute latest version.

Data Set. Select the data set to use when running the test. When you run a test with a data set, the test is run in multiple iterations, with each iteration using different values from the data set in place of predefined parameters. For details, see Use parameters in tests.

When running manual tests with multiple iterations, you can generate iteration-level reports, so that each iteration is counted separately as a run. For details, see Generate iteration-level reports.

Draft run. Select Draft run if you are still designing the test but want to run existing steps. After the run is complete, ALM Octane ignores the results.

- Click Let's Run! to run the tests.

The Manual Runner opens.

Tip: During the test run, click the Run Details button

to add run details.

to add run details.Using the buttons in the upper-right corner, you can switch between the following Manual Runner views:

View Description  Vertical view

Vertical viewDisplays the test steps as a list. The notes you make appear below each step.  Side-by-side view

Side-by-side viewDisplays the test steps in the left column. The notes you make appear in the right column. -

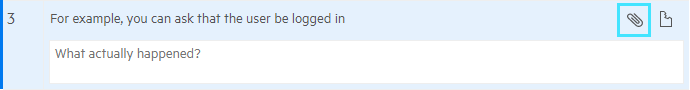

Perform the steps as described in the step or scenario. For each step or scenario, record your results in the What actually happened? field.

- Where relevant, assign statuses to the steps.

The status of the test is automatically updated according to the statuses you assign to the steps. You can override this status manually.

- If you stop a test in the middle and then click Run again, the existing run continues and previous statuses are not reset. To start from scratch, duplicate the run and run the new run instead.

Modify a run's test type

By default, a run inherits its Test Type value from the test or suite's test type.

You can modify this value for the run, so that, for example, if a test is End to End and you execute it as part of a regression cycle, you can choose Regression as the run's test type.

Note: The ability to modify a run's test type is defined by the site parameter EDITABLE_TEST_TYPE_FOR_RUN (default true).

Report defects during a run

If you encounter problems when running a test, open defects or link existing defects.

To add defects during a test run:

- In the Manual Runner toolbar, click the Test Defects button. If no defects were assigned to this run, clicking Test Defects opens the Add run defect dialog box.

If you want to associate the defect with a specific step, hover over the step and click Add Defect to open the Add run step defect dialog box.

- In the Add run (step) defect dialog box, fill in the relevant details for the defect. Use the buttons on the ribbon to add more information to the defect:

| Button | Details |

|---|---|

| Link to defect | Select the related existing defect(s), and click Select. |

| Attach | Browse for attachments. For details, see Add attachments to a run or step. |

| Copy steps to description | Click to add the test run steps to the defect's Description field. This helps you reproduce the problem when handling the defect. In a manual test, if the defect is connected to a run, all the run's steps are copied. If the defect is connected to a run step, the steps preceding the failed step are copied. If there are parameters in the test, only the relevant iteration is copied. In a Gherkin test, the relevant scenario is copied. |

| Customize fields | Select the fields to show. A colored circle indicates that the fields have been customized. For details, see Forms and templates. |

- Several fields in the new defect form are automatically populated with values from the run and test, such as Application module, Environment tags, Milestone, and Sprint. For details, see Auto-populated fields.

- Click Test Defects to view a list of the defects for this run and test.

Add attachments to a run or step

It is sometimes useful to attach documentation to the run.

To attach items to a run or a step in a run:

- Do one of the following, based on where you need to attach information:

  - To attach to a test run: In the toolbar, click the Run Attachments button.

  - To attach to a single step: In the step, next to the What actually happened? field, hover and click the Add attachments button.

- In the dialog box, attach the necessary files.

Tip: Drag and drop from a folder or paste an image into the Manual Runner.

- ALM Octane updates the Manual Runner to show the number of attached files.

Edit run step descriptions during execution

You can edit run step descriptions during execution, and choose whether to apply changes to the test as well.

To edit descriptions during test execution:

- In the toolbar, click the Edit Description button.

- Edit the description as needed, and then continue with the test execution.

- When you click Stop or move between test runs in a suite, a dialog box lets you save the edited description for future runs, or apply it to the current run only.

If a test contains parameters, or a step is called from a different test, you cannot save the edited description to the test. In this case, an alert icon to the right of the description indicates that the edited description applies to the current run only.

Similarly, you can edit a run step description during Gherkin test execution, but the modification cannot be saved to the test. It applies to the current run only.

End the run and view its results

After performing all steps, click the Stop button at the bottom of the Manual Runner window.

Run timer: If your workspace is set up to automatically measure the duration of a test run, the timer stops when you click the stop button, or close the Manual Runner window.

If you exit the system without stopping the run, the test run duration is not measured correctly.

If you have not marked all steps or validation steps, the run status is still listed as In Progress. Finish entering and updating step details to change the status.

In the Runs tab of the test, click the link for your specific run and view the results in the Report tab.

The report displays step-by-step results, including the results of all validation steps, and any attachments included in the run.

Note: The report displays up to 1000 test steps. You can use the REST API to retrieve more records. For details, see Create a manual test run report.

Run timer

You can measure the duration of manual or Gherkin test runs automatically. The timer starts when the Manual Runner window is launched, and stops when the run ends or the Manual Runner window is closed.

The timing is stored in the test run's Duration field.

The automatic timing option depends on a site or shared space parameter: AUTOMATIC_TIMING_OF_MANUAL_RUN_DURATIONS_TOGGLE. For details, see Configuration parameters.

Edit a completed run

Depending on your role, you have different options for editing completed manual test runs:

| Roles | Details |

|---|---|

| Roles with the Modify Completed Run permission | You can edit a completed run at any stage. |

| Roles without the Modify Completed Run permission | You can edit a completed run while the Manual Runner is still open. One of the following conditions needs to be met: |

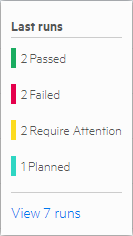

Last runs

You can view the Last runs for each manual or Gherkin test. The information from the last run is the most relevant and can be used to analyze the quality of the areas covered by the test.

To view last runs:

- In the Tests tab of Requirements, Features, User Stories, or Application Modules, display the Last runs field.

The Last runs field contains a color bar, where each color represents a status of the last runs.

- Hover over the bar to view a summary of the test run statuses.

- Click the View link to open the details of the last runs in a popup window.

The Last runs field aggregates the last runs of the test in each unique combination of: Release, Milestone, Program, and Environment tags.

Example: If a test was run on Chrome in Milestone A and Milestone B, and on Firefox in Milestone A and Milestone B, the test's Last Runs field represents the last run for each combination, four runs in total.

The executed run that is considered last is the run that started latest.

A planned run is counted as a separate last run, even if its Release, Milestone, Program, and Environment tags match those of an executed run. The planned run is distinguished by its 'Planned' status.

The planned run that is considered last is the planned run that was created latest.
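The aggregation rules above can be illustrated with a minimal Python sketch. The Run class and the last_runs function are hypothetical names used for illustration only; they are not part of ALM Octane or its REST API. The sketch assumes that each run carries a creation time and, once executed, a start time.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Run:
    """Hypothetical, simplified run record (not ALM Octane's data model)."""
    release: str
    milestone: Optional[str]
    program: Optional[str]
    environment_tags: FrozenSet[str]
    status: str                         # for example "Passed", "Failed", "Planned"
    created: datetime
    started: Optional[datetime] = None  # None while the run is only planned


def last_runs(runs: List[Run]) -> List[Run]:
    """Keep one last run per combination; planned runs are tracked separately."""
    latest = {}
    for run in runs:
        planned = run.status == "Planned"
        key = (run.release, run.milestone, run.program,
               run.environment_tags, planned)
        # Executed runs are ranked by start time, planned runs by creation time.
        rank = run.created if planned or run.started is None else run.started
        if key not in latest or rank > latest[key][0]:
            latest[key] = (rank, run)
    return [run for _, run in latest.values()]


# Example from the text: two browsers and two milestones, two runs for each.
example_runs = []
day = 1
for browser in ("Chrome", "Firefox"):
    for milestone in ("Milestone A", "Milestone B"):
        for _ in range(2):
            when = datetime(2024, 1, day)
            example_runs.append(Run("Release 1", milestone, None,
                                    frozenset({browser}), "Passed", when, when))
            day += 1

result = last_runs(example_runs)
print(len(result))  # 4 last runs, one per combination
```

Adding a planned run with the same Release, Milestone, Program, and Environment tags as an executed run produces an additional entry, because the sketch keys planned runs separately.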

You can filter the last runs according to these test run attributes: Release, Milestone, Program, Environment tags, Status, Run By. The Last Runs field then includes last runs from the selected filter criteria.

Depending on which Tests tab you are in, apply test run filters as follows:

| View | How to apply Last Run filters |

|---|---|

| Requirements > Tests, Backlog > Tests, Team Backlog > Tests, Quality > Tests | Use the Run filters in the right-hand Filter pane. |

| Feature > Tests, User Story > Tests | Use the filter bar. |

| Quality > Tests | If you select a program in the context filter, you can see Last Runs only for the selected program. |

The Dashboard module has several widgets based on the last run, such as Last run status by browser and Last run status by application module. For details, see Dashboard.

Exceptions to the calculation of last runs

In some cases, Last runs ignores field filters that were applied to the runs. This applies to fields in the Tests tab grids shown in the Quality and Requirements modules, and to the related widgets.

This exception occurs when you use an OR condition in cross-field filters for runs, and only some of the OR conditions include such a cross filter.

For example, if you have a cross filter (Last runs.Release: ...) OR (Last runs.Status: ...), the Runs filters are applied.

If, however, you have a filter (Last runs.Release: ...) OR (Author ...), the Runs filters are not applied. This results in a larger set of runs.
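As a rough illustration of this rule, the following Python sketch checks whether every branch of an OR condition includes a Last runs cross filter. The representation of a filter as lists of field names, the "Last runs." prefix check, and the function name are assumptions made for this example; they do not reflect how ALM Octane stores or evaluates filters.

```python
CROSS_FILTER_PREFIX = "Last runs."  # assumed naming, taken from the examples above


def run_filters_applied(or_branches):
    """Return True when every OR branch contains a Last runs cross filter.

    Each branch is a list of field names, a hypothetical representation
    chosen for this sketch.
    """
    if not or_branches:
        return False
    return all(
        any(name.startswith(CROSS_FILTER_PREFIX) for name in branch)
        for branch in or_branches
    )


both_cross = run_filters_applied([["Last runs.Release"], ["Last runs.Status"]])
mixed = run_filters_applied([["Last runs.Release"], ["Author"]])
print(both_cross, mixed)  # True False
```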

Run with latest version

When you plan a manual test run or test suite run (for manual tests), by default the test steps executed are the ones that existed at the time of the planning. If the test steps changed, the new steps are ignored in the upcoming runs.

Using the Run with latest version field, you can instruct ALM Octane to use the latest version of the test.

To use the latest test versions in manual tests:

- In the manual test's page (MT) click the Details tab. To apply the settings to multiple tests, go to the Tests tab, for example in the Quality module, and select multiple tests.

- Click Plan Run.

- In the Plan Run dialog box, select Yes in the Run with latest version field. Click Plan.

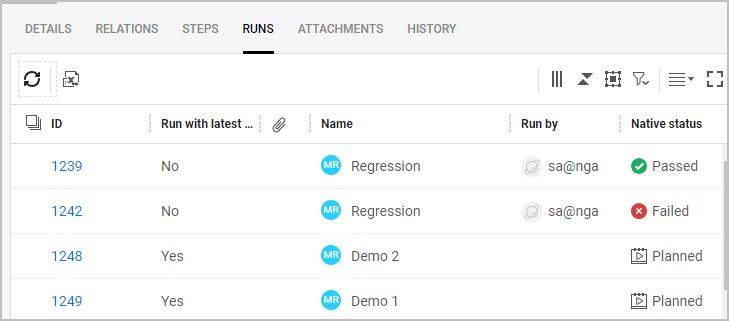

- Open the test's Runs tab, and display the Run with latest version column to see the setting for each of the manual runs.

When a test is executed and no longer in Planning status, the Run with latest version field is locked.

To use the latest test versions for a test suite:

- In the test suite's page (TS) click the Planning tab. To apply settings to multiple test suites, go to the Tests tab, for example in the Quality module, and select multiple test suites.

- Click Plan Suite Run.

- In the Plan Suite Run dialog box, select Yes in the Run with latest version field. This sets the default for all manual tests added to the test suite. Click Plan.

- Open the test's Suite Runs tab, and display the column Run with latest version to show the setting for each of the suite runs.

Unified test runs grid

The Runs tab in the Quality module provides a unified grid of runs across all tests, both planned and executed. Testing managers can filter the grid, and do bulk updates on runs across different tests.

Note: By default, the Runs tab is accessible to workspace administrators only. For details on allowing access to other roles, see Roles and permissions.

Display limits

Some filter definitions on the Runs tab can result in a large number of matching runs. The Runs grid will include the first 2000 runs in the filter, according to the sort-by field. Refine the filter to make sure that all the runs you are interested in are included.

The following applies to grids with over 2000 runs:

- You can sort the grid according to the following fields: ID, Started, Creation time, and Latest pipeline run.

- If you sort by any other field, the grid will not display any results.

- No results will display if the grid is grouped.
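The display rules can be summarized in a short Python sketch. The limit and the sortable field names come from the text above; the function and constant names are hypothetical and serve only to show how the conditions combine.

```python
# Values taken from the text above; the function itself is a hypothetical sketch.
DISPLAY_LIMIT = 2000
SORTABLE_OVER_LIMIT = {"ID", "Started", "Creation time", "Latest pipeline run"}


def grid_shows_results(matching_runs, sort_field, grouped=False):
    """Return True if the Runs grid is expected to display results."""
    if matching_runs <= DISPLAY_LIMIT:
        # Below the limit, sorting and grouping are not restricted.
        return True
    # Over the limit, only certain sort fields work, and grouping is not supported.
    return sort_field in SORTABLE_OVER_LIMIT and not grouped


print(grid_shows_results(5000, "Started"))                # True
print(grid_shows_results(5000, "Status"))                 # False
print(grid_shows_results(5000, "Started", grouped=True))  # False
```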

Test run statuses

Test runs have two status fields: Native status and Status.

- Native status. The test run's actual status. Can be any of the following values: Passed, Failed, Blocked, Skipped, In Progress, Planned.

- Status. A reduced list of test run statuses, used for widgets and coverage reporting. Can be any of the following values: Passed, Failed, Requires Attention, Skipped, Planned.

The Status value is derived automatically from the Native status value. The two fields are mapped as follows:

| "Native status" value | Mapped "Status" value |

|---|---|

| Passed | Passed |

| Failed | Failed |

| Blocked | Requires Attention |

| Skipped | Skipped |

| In Progress | Requires Attention |

| Planned | Planned |
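If you process run data outside ALM Octane, for example in a script that summarizes exported results, the mapping in the table can be expressed as a simple lookup. The following Python sketch is illustrative only; the summarize helper is a hypothetical name and not an ALM Octane API.

```python
from collections import Counter

# Mapping copied from the table above: Native status -> Status.
NATIVE_TO_STATUS = {
    "Passed": "Passed",
    "Failed": "Failed",
    "Blocked": "Requires Attention",
    "Skipped": "Skipped",
    "In Progress": "Requires Attention",
    "Planned": "Planned",
}


def summarize(native_statuses):
    """Count runs per summary Status (hypothetical helper for this sketch)."""
    return Counter(NATIVE_TO_STATUS[status] for status in native_statuses)


summary = summarize(["Passed", "Blocked", "In Progress", "Failed", "Planned"])
print(summary["Requires Attention"])  # 2
```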

Generate iteration-level reports

You can set up dashboard widgets to report on manual runs at the iteration level, so that each iteration with parameters is counted separately as a run.

Gherkin tests: Iteration-level reporting is not available for Gherkin tests, because a Gherkin test can have multiple scenario outlines with different sets of iterations. For iteration-level reporting, use BDD specifications instead. For details, see Create and run BDD scenarios.

Iteration-level reporting is available for the following widgets:

- Summary graphs: Test run history (manual), Last test runs, Backlog's last test runs

- Traceability graph: Backlog's last test runs, Test’s last test runs

To enable iteration-level reporting, when configuring a supported widget select the checkbox Count iterations as separate runs.

The status of an iteration is updated automatically based on the status you assign to the steps in that iteration when running the test. If you do not assign a status to the steps, the iteration status will remain Planned. For details, see Run tests.

Version compatibility

- Runs created in ALM Octane versions earlier than 16.1.200 have an iteration count of 1.

- Planned runs created in ALM Octane versions earlier than 23.4 have an iteration count of 1.
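To show the difference between counting runs and counting iterations, here is a minimal Python sketch. The ManualRun class and count_runs function are hypothetical, and the sketch assumes that a run without per-iteration data is counted once using its run status, in line with the iteration count of 1 described above. It does not reproduce how the widgets are actually calculated.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class ManualRun:
    """Hypothetical run record: a run status plus optional iteration statuses."""
    status: str
    iteration_statuses: List[str] = field(default_factory=list)


def count_runs(runs, count_iterations=False):
    """Count runs per status, optionally counting each iteration separately."""
    counts = Counter()
    for run in runs:
        if count_iterations and run.iteration_statuses:
            # Each iteration contributes its own status.
            counts.update(run.iteration_statuses)
        else:
            # Assumption: a run without iteration data counts as one iteration.
            counts[run.status] += 1
    return counts


runs = [
    ManualRun("Failed", ["Passed", "Failed", "Planned"]),
    ManualRun("Passed"),
]
by_run = count_runs(runs)
by_iteration = count_runs(runs, count_iterations=True)
print(sum(by_run.values()), sum(by_iteration.values()))  # 2 4
```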

Content field

Manual tests, Gherkin tests, and test suites include a field called Content. The meaning of this field is dependent on its context, as follows:

| Test entity | Content field indicates... |

|---|---|

| Manual test or manual test run | Number of steps (regardless of examples or iterations) |

| Gherkin test or BDD scenario | Number of scenarios and scenario outlines |

| Test suite | Number of tests |

| Suite run | Number of runs |
